When developing a solution or capability, it is usually good to make it as simple as possible — but no simpler. The key to walking the fine line between too simple and too complex (or too heavy or too expensive or whatever) is to approach problems in an organized and consistent way. This increases the chance of finding the appropriate solution not only in terms of complexity and weight, but also in terms of accuracy and correctness. You don’t want a solution that doesn’t do everything you need, but you don’t want to spend a hundred thousand dollars for a twenty-thousand-dollar problem, either.

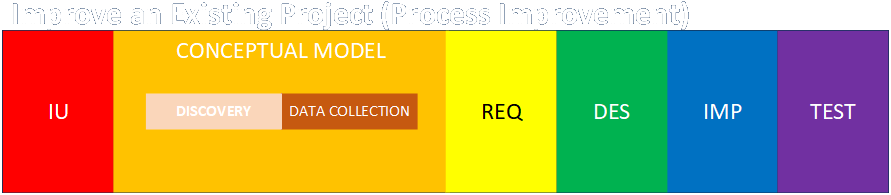

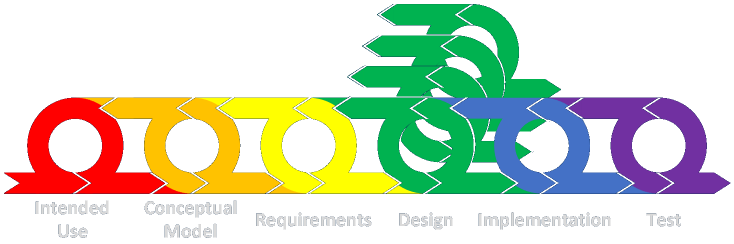

Different problems can come up if you skip different steps in the process, or if you do them incorrectly or incompletely. The steps are as follows:

Intended Use (or Problem Statement): Defining the problem to be solved

Conceptual Model (including Discovery and Data Collection): Understanding the current situation in detail (describing the As-Is state)

Requirements: Identifying the specific functional and non-functional needs of the organization, users, customers, and solution (describing the abstract To-Be state)

Design: Describing a proposed solution, or the best among many possibilities (describing the concrete To-Be state)

Implementation: Creating and deploying the chosen design

Test and Acceptance (Verification and Validation): Making sure the solution works correctly as intended, and that it actually addresses the intended use

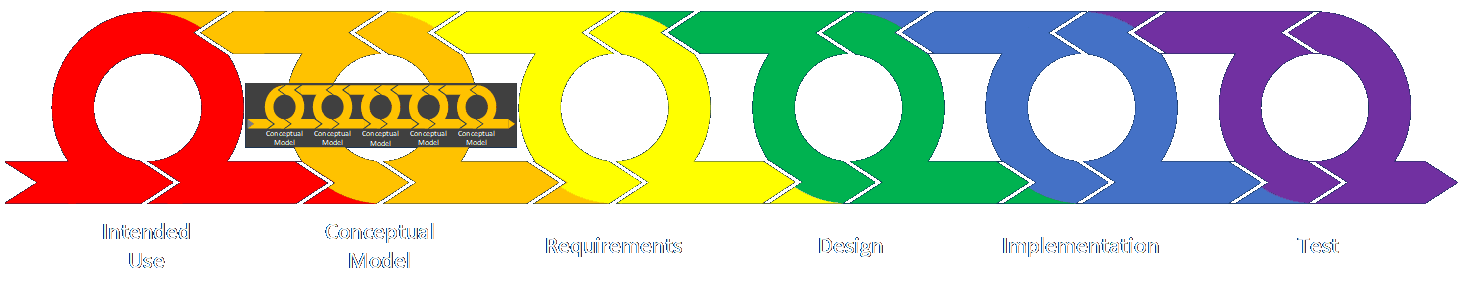

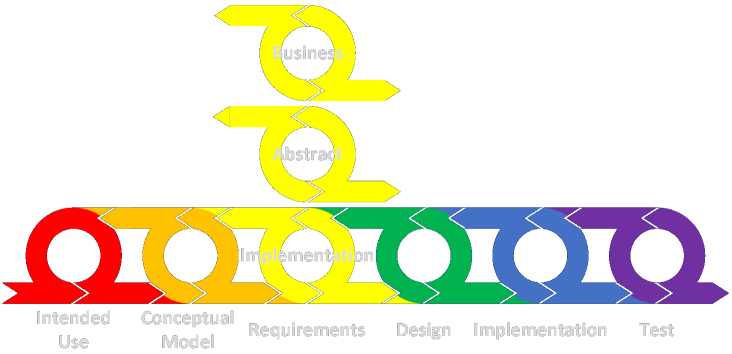

The phases are shown below to reflect that iteration, review, and feedback occur both within each phase and between phases. Within a phase, they ensure that everything is properly understood and agreed to by the relevant participants and stakeholders. Between phases, work in any particular phase can improve understanding of the overall problem and lead to modifications in other phases. I write about this elsewhere here and here, just for starters. I also describe how the phases are conducted in different management contexts here. For this discussion, pay particular attention to the difference between when the conceptual modeling work is done in a project that changes an existing situation and when it is done by a team creating something entirely new. That said, most efforts within organizations are likely to involve changes or additions to what’s already there, so the descriptions that follow will assume that.

More pithily stated, the process is: define the problem, figure out what’s going on now, figure out what’s needed and by whom in some detail, propose solutions and choose one, implement and deploy the solution, and test it. Different problems arise when errors and omissions are made in different phases. Let’s look at these in order.

All items identified in each phase of a project or engagement should map to one or more items in both the previous and subsequent phases. This ensures that all items address the originally identified business need(s), that all elements of the existing system are considered, that all participants’ needs are met, that the design addresses all needs, that the implementation encompasses all elements of the design, and that all elements of the implementation (and deployment) are provisioned, tested, and accepted. Equally important, tracking items in this way ensures that effort is not wasted on extra analysis, implementation, and testing for items that aren’t needed because they don’t address the business need.

The tool for doing this is a Requirements Traceability Matrix. This article discusses how it is used to trace and link items across all the phases. However, there is another way to think about the traceability and mapping of requirements and other items: as any form of logical, possibly hierarchical, model that ensures all elements of the solution are considered as a unified and logical whole.
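To make the mapping idea concrete, here is a minimal sketch of a traceability check in Python. It is not how any particular RTM tool works. The phase identifiers, the `Item` class, the `find_trace_gaps` function, and the sample item IDs are all hypothetical names made up for illustration, and the sketch assumes each item records only its links back to the immediately preceding phase.

```python
from dataclasses import dataclass, field

# Phase names follow the framework; the identifiers themselves are hypothetical.
PHASES = ["intended_use", "conceptual_model", "requirements",
          "design", "implementation", "test"]


@dataclass
class Item:
    item_id: str
    phase: str
    # IDs of items in the immediately preceding phase that this item addresses.
    traces_back_to: set = field(default_factory=set)


def find_trace_gaps(items):
    """Report items that lack a backward link or that nothing downstream addresses."""
    by_id = {item.item_id: item for item in items}
    covered = set()   # items that some next-phase item points back to
    orphans = []      # items with no valid link to the previous phase

    for item in items:
        index = PHASES.index(item.phase)
        valid_links = {
            target for target in item.traces_back_to
            if target in by_id
            and PHASES.index(by_id[target].phase) == index - 1
        }
        covered |= valid_links
        # Items in the first phase have no previous phase to trace to.
        if index > 0 and not valid_links:
            orphans.append(item.item_id)

    last_phase = PHASES[-1]
    unaddressed = [
        item.item_id for item in items
        if item.phase != last_phase and item.item_id not in covered
    ]
    return {"orphans": sorted(orphans), "unaddressed": sorted(unaddressed)}


# Hypothetical sample data: R2 traces to nothing, R3 has no design behind it.
items = [
    Item("U1", "intended_use"),
    Item("C1", "conceptual_model", {"U1"}),
    Item("R1", "requirements", {"C1"}),
    Item("R2", "requirements"),
    Item("R3", "requirements", {"C1"}),
    Item("D1", "design", {"R1"}),
    Item("I1", "implementation", {"D1"}),
    Item("T1", "test", {"I1"}),
]
gaps = find_trace_gaps(items)
print(gaps)  # {'orphans': ['R2'], 'unaddressed': ['R2', 'R3']}
```

In this sample, R2 is the kind of item that invites extra work with no business need behind it, while R3 is a need the design never picked up. A spreadsheet or a hierarchical model can answer the same two questions; the code only shows what is being checked.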

Finally, while this framework seemingly includes a lot of steps and descriptive verbiage, at root it’s pretty simple, and the same rules apply for managing and conceiving the work as for the solution. In the end, the process used should be as simple as possible, but no simpler. The key is to use a consistent and organized approach that maintains and enhances communication, situational awareness, engagement, and the appropriate level of detail.

Intended Use

The simplest thing to say here is that if you don’t identify the problem correctly, you probably aren’t going to solve it. The iterative nature of the framework does allow the problem definition to be modified as work proceeds, so even if the problem isn’t defined exactly right at the beginning, it can be redefined and made correct as investigations proceed. For this exercise, however, let’s assume we do know what we’re trying to accomplish.

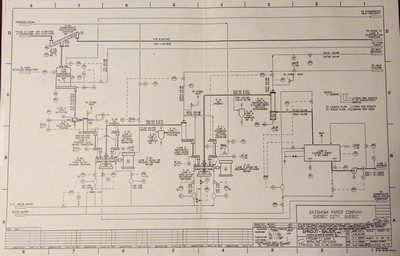

Conceptual Model

This mostly involves figuring out what’s going on now, in the proper amount of detail. If you omit this step, or if you do not complete it with sufficient thoroughness and accuracy, you may encounter the following problems:

- incomplete understanding of current processes, causing you to leave things out of your analysis and solution, and not solve problems users may be having

- incomplete understanding of current processes, causing you to “re-invent the wheel” by reimplementing capabilities you already have

- incomplete understanding of data, so you don’t understand the completeness, quality, or usability of your data for different purposes (see here, here, and here)

- incomplete understanding of interfaces and communication channels, so you don’t know who’s talking to whom and why (channels include human-human, human-system, system-system, human-environment, system-environment, human-process_item, and system-process_item, where a process_item could be a document, a package, a vehicle, or an item being manufactured or assembled or a component thereof; any of these can be within your own organization or involve external organizations)

- incomplete understanding of different kinds of queues and storage pools, causing incorrect analysis or design of solutions

- incomplete understanding of extant security measures and possible threats, leading to a range of potential vulnerabilities

- incomplete understanding of potential solutions, which may keep you from noticing germane details during discovery and data collection

- incomplete understanding of all of the above, causing potential lack of awareness of scope and scale of operations

Requirements

This involves learning what the users, customers, and organization(s) need, and even what the solution needs. This step can even include identifying methodological requirements concerning how the various steps are performed in each phase. Functional requirements describe what the solution does. Non-functional requirements describe what the solution is.

Skipping all or part of this step can lead to these problems:

- not talking to all of the users can cause you to miss problems like ease of use, intuitiveness of the system, opportunities for streamlining operations, the difficulty or outright inability to fix mistakes, the time and complexity of tasks, design that promotes making errors instead of preventing them, and more.

- not talking to all of the operators and maintainers can cause you to miss difficulties with documentation, modifying or repairing the system, performing backups, generating reports, diagnosing problems, restarting the system, applying updates and patches, arranging failovers and disaster recovery, notifying users of various conditions, and more.

- not talking to the owners of external systems and organizations with whom you interact can cause you to miss communication errors, data and timing mismatches, changes to external operations, and the like.

- not talking to vendors can cause you to miss problems like identified errors and faults, updates, recalls, updated usage guidance, end-of-support notices, training, and so on.

- not talking to implementation and deployment SMEs can cause you to miss problems like solution requirements, deployment needs, implementability, possibilities for modularity and reuse, availability of teams and resources, fitness of use for different tools and techniques, inadequate robustness, insufficient bandwidth or storage or other capability, compatibility with different hardware and software and OS environments, and more.

- not talking to UI/UX and other designers can cause problems with usability, consistent look and feel, branding, disability access, testing, and so on.

- not talking to customers can cause you to miss problems like ease of use, reluctance to use new features or otherwise change, fears about security, concerns about price changes, perceived long-term viability of the organization, overall preferences and use trends, and others.

Design

Per the beginning of this post, solutions should be as simple as possible, but no simpler. Choices among many possibilities must consider many factors, all of which can be analyzed in a tradespace with all other factors. In one case, you may choose a dashboard. In another case a monthly report may suffice. A quick and dirty macro may serve the purpose in other cases. For the sake of consistency, a heavier and more expensive capability may be used where a simpler solution could work, if the organization already owns it and many experienced analysts and implementers are readily available. Conversely, a new capability might not be adopted if a simpler one will do the job and be cheaper, more approachable, and more maintainable.

There are no hard-and-fast rules for making these judgments. Each organization must apply business acumen to its own situations as they arise. As described above, the right effort should be applied to the problem to generate the right solution.

Implementation

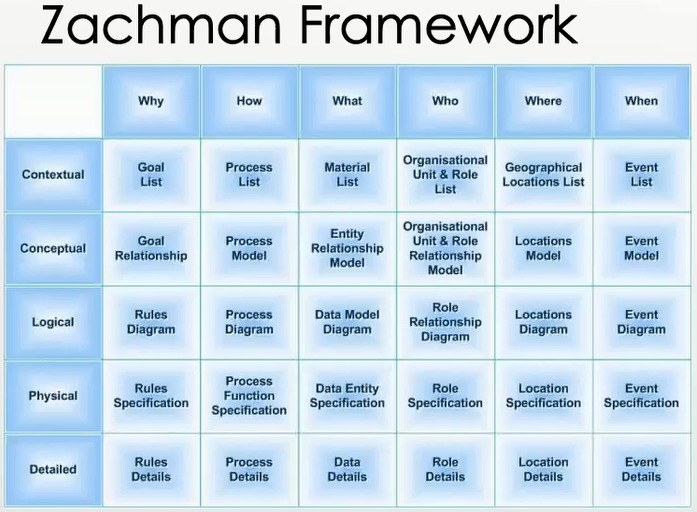

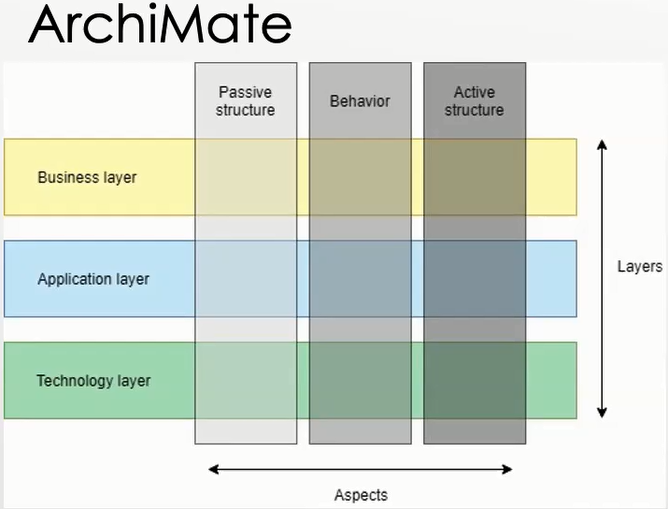

Work in all phases of every engagement or project must proceed on three levels, as described here. The top layer considers the organization, its sub-units and departments and functions and locations, and its people. The bottom layer is the hardware and software that make the process run. This is where the technology and other physical plant come into play. An assembly line, or the equipment and building that make up a coffee shop, counts in this layer.

The middle layer is the abstract or application layer. It is where the actions, decisions, calculations, governing rules, and data that logically drive the organization’s process are described. The data and operations so identified and described marry the organization and its people to whatever physical implementations are needed.

The implementation phase can involve an almost limitless number of considerations, levels, and components, all of which have to be constructed, tested, and deployed, possibly in multiple phases depending on the scope and scale of the solution being developed. Solutions must sometimes be rolled out in phases, but small or otherwise incremental capabilities can be deployed all at once. The key is to do the implementation and deployment in a way that makes sense for the solution.

If the design of the abstract, middle layer is defined correctly, this will minimize potential problems with the implementation and deployment. Moreover, if the implementation and deployment SMEs are involved from the beginning, depending on the nature of the envisaged solution, their ongoing insights and analysis should mitigate problems at this stage.

Mitigate, that is, but not eliminate. Difficulties can always arise. The major point of this exercise is that the problem isn’t solved, or even conceived, at the implementation stage. Ideally it is logically solved before you ever get that far. As long-time computer industry analyst Jeff Duntemann once observed (riffing on Ben Franklin, as I recall), “An ounce of analysis is worth a pound of debugging.”

Test and Acceptance

Testing is meant to tell you whether the thing you built works, and whether it is the right thing to have built (to solve the identified problem and realize the sought-after value). These two operations are formally known as verification and validation.

A form of this V&V happens every time you iterate within a phase while you are working toward the most correct and complete understanding. For example, when you perform discovery and data collection in the conceptual modeling phase, you document your findings, have the subject matter experts review your documentation and tell you what you got wrong, and then make the necessary edits and resubmit for review. This process continues until the SMEs confirm that your documentation, and hence your understanding (of the current state, and potentially of other solution components), is complete and correct.

A different form of it occurs when you iterate back and forth between phases. You identify gaps that need to be filled and modifications that need to be made in order for the whole to make logical and consistent sense.

The main V&V operations are performed on the implemented items. Verification operations tend to be more concrete and definable, and the tests that perform them tend to be more amenable to automation. Validation operations tend to be more abstract and require expert judgment. Completion of all testing leads to a determination of non-acceptance, partial acceptance (with limitations), or full acceptance. Obviously, final validation and acceptance are unlikely to be completed if the problem has not been solved using a consistent process that considers all relevant factors.
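The split between automatable verification and judgment-based validation, and the three possible determinations, can be sketched as follows. This is only an illustration under stated assumptions: the `Acceptance` enum, the `determine_acceptance` function, the check names, and especially the rule that "partial acceptance" means every failure is on a list of limitations the stakeholders agreed to tolerate are all hypothetical. Real acceptance criteria are set by each organization.

```python
from enum import Enum


class Acceptance(Enum):
    NON_ACCEPTANCE = "non-acceptance"
    PARTIAL = "partial acceptance (with limitations)"
    FULL = "full acceptance"


def determine_acceptance(verification_checks, validation_judgments,
                         accepted_limitations=()):
    """Combine automated verification with recorded expert validation.

    verification_checks: name -> zero-argument callable returning True on pass.
    validation_judgments: name -> bool recorded by a reviewer (expert judgment).
    accepted_limitations: names stakeholders agreed to tolerate if they fail
        (an assumed rule, not one prescribed by the framework).
    """
    # Verification: run each automated check.
    results = {name: bool(check()) for name, check in verification_checks.items()}
    # Validation: take the reviewers' recorded judgments as given.
    results.update({name: bool(ok) for name, ok in validation_judgments.items()})

    failures = sorted(name for name, ok in results.items() if not ok)
    if not failures:
        outcome = Acceptance.FULL
    elif all(name in accepted_limitations for name in failures):
        outcome = Acceptance.PARTIAL
    else:
        outcome = Acceptance.NON_ACCEPTANCE
    return outcome, failures


# Hypothetical checks: one automated test passes, one fails, one judgment passes.
verification = {
    "totals_match_ledger": lambda: sum([10, 20]) == 30,
    "export_under_limit": lambda: 120 <= 100,
}
validation = {"report_answers_business_question": True}

outcome, failures = determine_acceptance(
    verification, validation, accepted_limitations={"export_under_limit"}
)
print(outcome.value, failures)
# partial acceptance (with limitations) ['export_under_limit']
```

The verification entries are functions a machine can run repeatedly, while the validation entries are simply recorded answers from people. That difference is the point of the sketch.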

Conclusion

Do the proper analysis, through all the steps, in order, at a weight and level of effort appropriate for the problem, and that will give you the best chance to succeed. Jumping in and just implementing something is not likely to yield good results.