Root cause analysis is the process of digging into a problem in order to find out what actually caused it. In simple systems or situations this process is pretty straightforward, but of course we often work in situations that are far more complex. When that happens we need a more robust way to figure out what’s going on and address it.

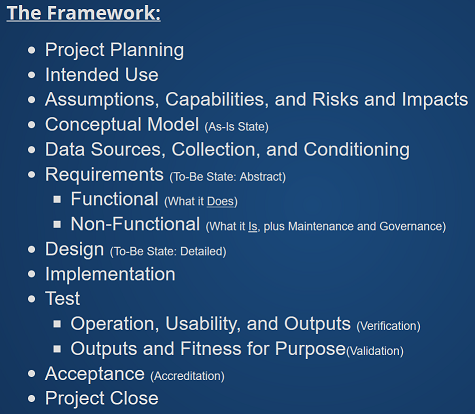

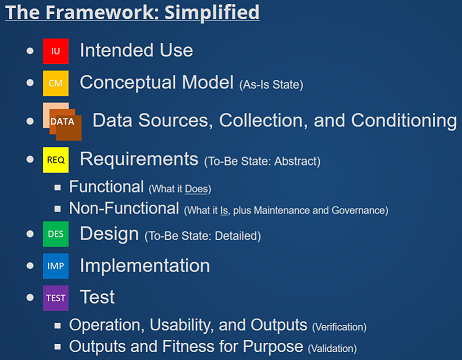

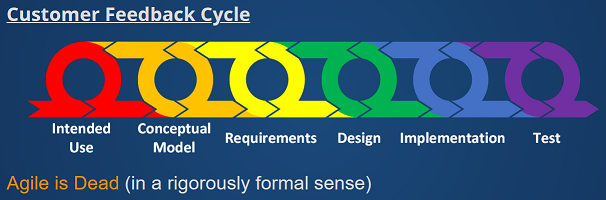

The BABOK advances the following general procedure for doing this work. Sharp-eyed readers may note similarities to the steps in my framework, and also to the scientific method.

- Problem Statement Definition: This describes the symptoms of the problem, or at least its observable effects.

- Data Collection: This is where we gather all the information we can about the system in which the problem occurred.

- Cause Identification: This identifies candidates for the ultimate cause of the problem, potentially from many possibilities.

- Action Identification: This describes the corrective actions needed to fix the problem and, ideally, to prevent its recurrence.

The BABOK lists two main methods of performing root cause analysis.

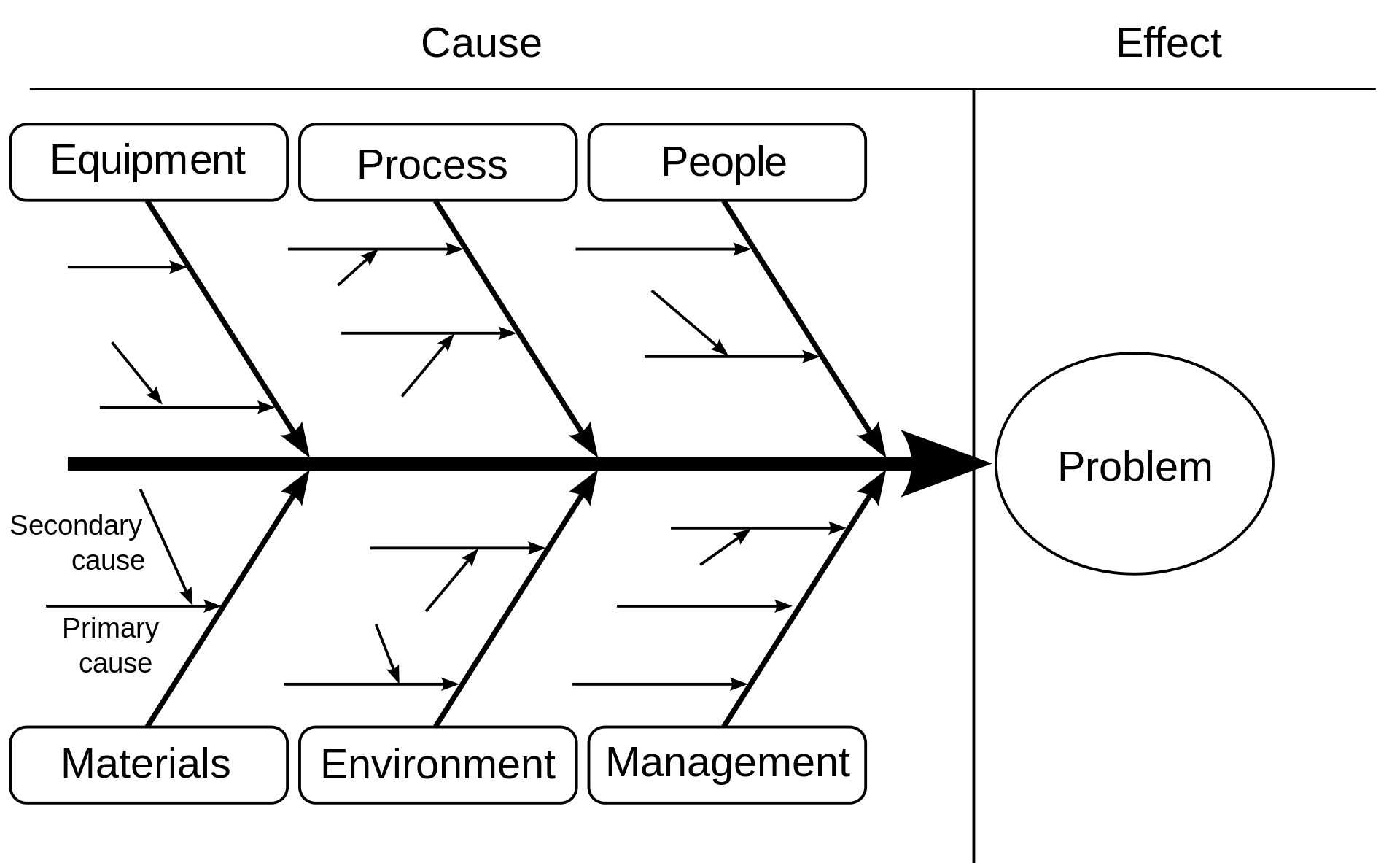

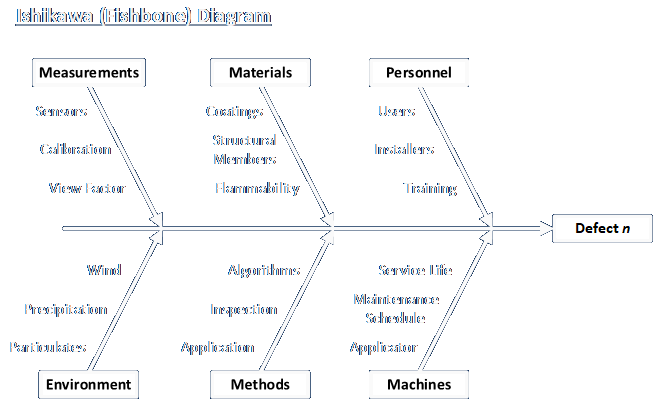

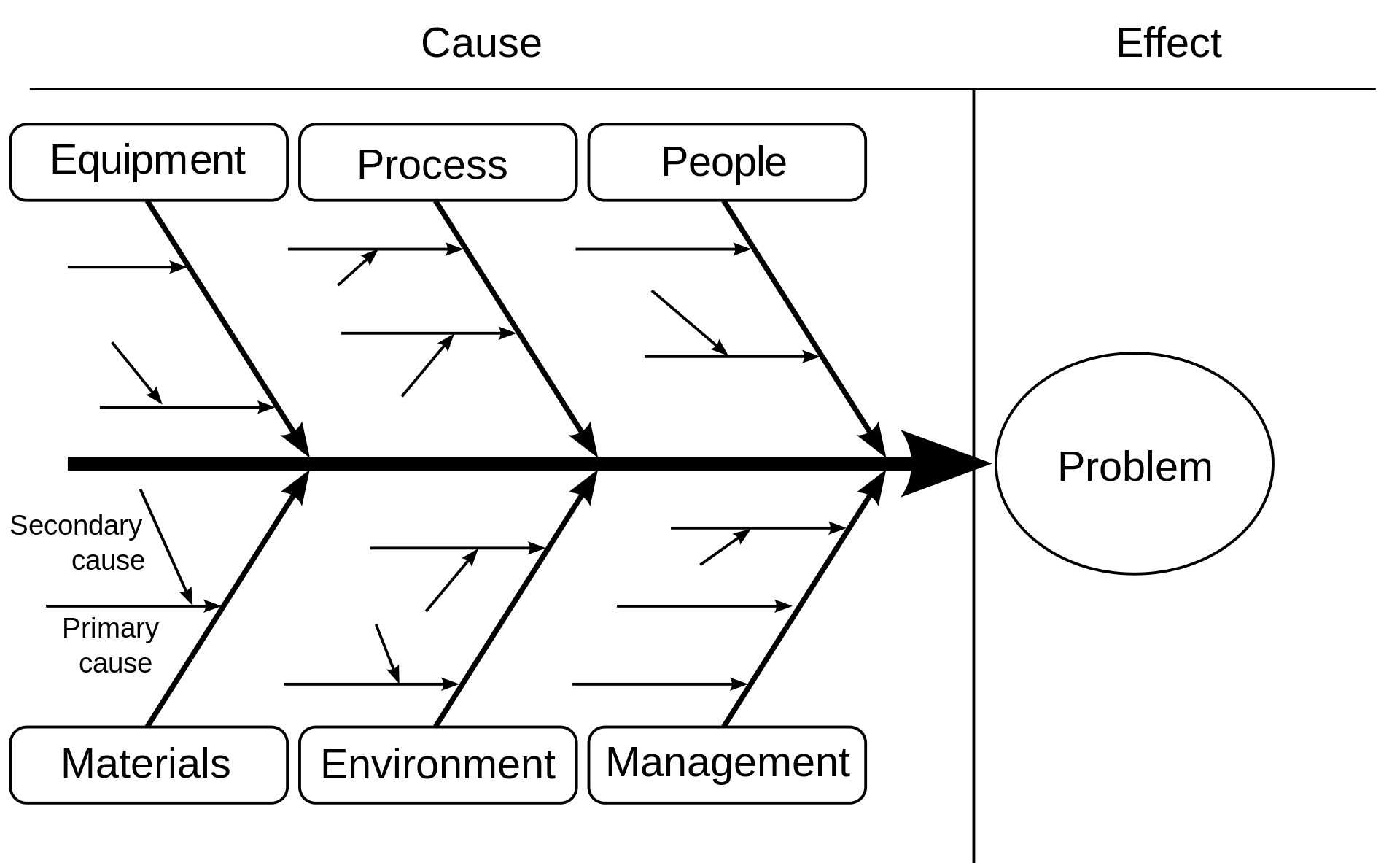

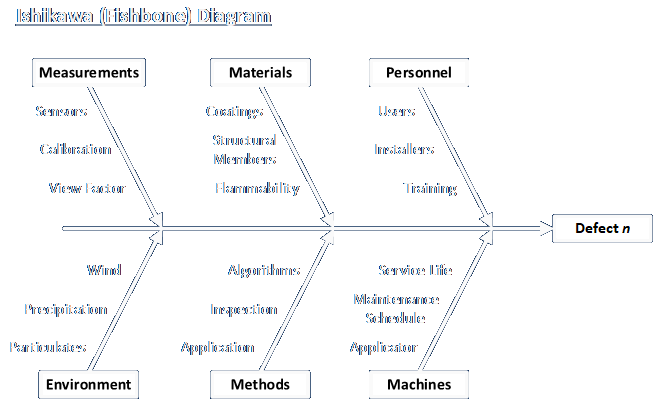

Fishbone Diagram

The fishbone diagram, also referred to as an Ishikawa diagram, is a graphical method of structuring potential contributing factors.

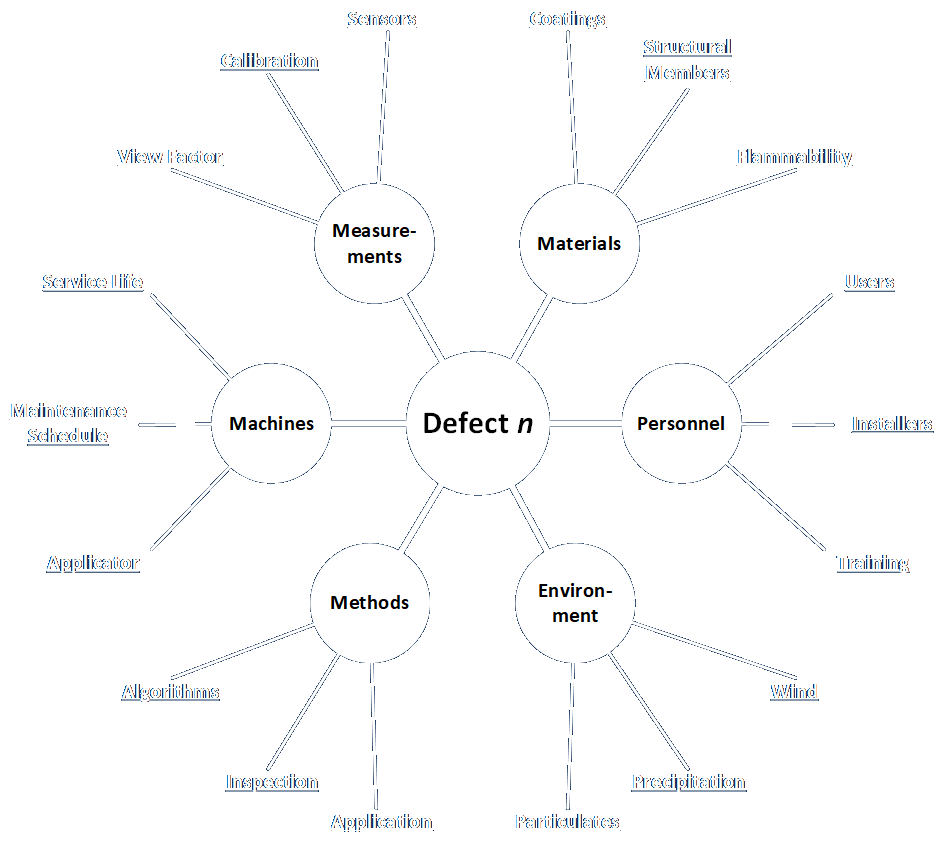

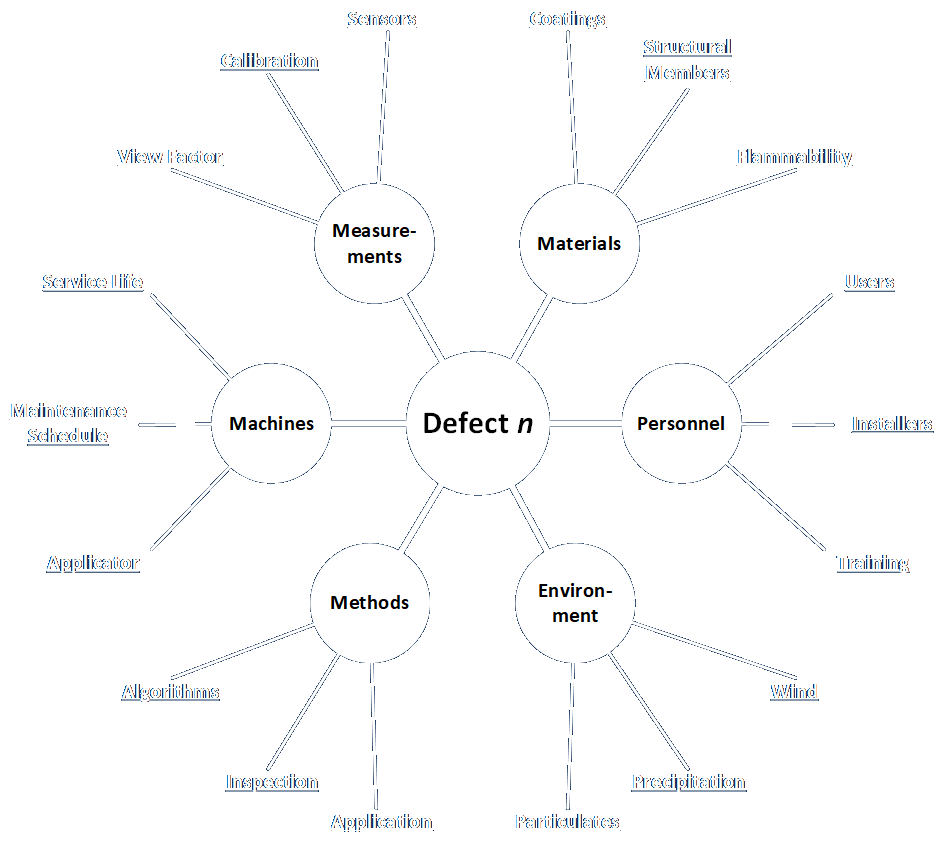

The diagram can even be drawn as a Mind Map like this:

Each form of the diagram allows investigators to identify primary, secondary, and even tertiary contributing factors, as shown in the upper version. The primary branches of the diagram can take on any labels, and there can be any number of them, but it used to be common to label the main branches as the Six Ms as follows: Measurement, Methods, Mother Nature, Machine, Materials, and Man. The preferred way of doing this now is to use the generic terms People and Environment for Man and Mother Nature, respectively. This heuristic is simply a way to inspire investigators to consider a wide range of contributing factors.
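For readers who think in data structures, a fishbone diagram can be sketched as a small nested mapping: primary branches hold secondary factors, which in turn hold tertiary factors. The block below is only an illustration under that assumption. The problem, every listed cause, and the helper names `render` and `empty_branches` are hypothetical and not part of the BABOK.

```python
from typing import Dict, List

# Primary branch -> secondary factor -> list of tertiary factors.
Fishbone = Dict[str, Dict[str, List[str]]]

# Hypothetical problem and causes, invented purely for illustration.
PROBLEM = "Late customer invoices"

fishbone: Fishbone = {
    "Measurement": {"No cycle-time metric": []},
    "Methods": {"Manual approval step": ["Approver often unavailable"]},
    "Environment": {},
    "Machine": {"Billing system batch runs weekly": []},
    "Materials": {"Incomplete order data": ["Free-text address field"]},
    "People": {"New staff untrained": []},
}


def render(problem: str, diagram: Fishbone) -> str:
    """Return the diagram as an indented text outline."""
    lines = [problem]
    for primary, secondaries in diagram.items():
        lines.append(f"  {primary}")
        for secondary, tertiaries in secondaries.items():
            lines.append(f"    {secondary}")
            for tertiary in tertiaries:
                lines.append(f"      {tertiary}")
    return "\n".join(lines)


def empty_branches(diagram: Fishbone) -> List[str]:
    """List primary branches with no factors yet, as a prompt to look wider."""
    return [primary for primary, secondaries in diagram.items() if not secondaries]


print(render(PROBLEM, fishbone))
print("Branches still unexplored:", empty_branches(fishbone))
```

Listing the empty branches mirrors the purpose of the heuristic: it nudges investigators toward categories they have not yet considered.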

The Five Whys

Another common method for digging into problems is to keep asking “Why?” until you drill through enough clues and connections to find the root cause of a problem. (Now why do I suddenly have the lyrics for “Dem Bones” running through my head?) The point of this method is to not be satisfied with answers that are, per H.L. Mencken, “clear, simple, and wrong.”
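As a rough sketch of the idea, the chain of "Why?" questions can be modeled as following links from each observation to its explanation until no further answer is known. The cause links below are hypothetical, as is the function name `ask_why`. In practice the answers come from investigation, not from a lookup table.

```python
from typing import Dict, List

# Hypothetical cause links: each observation maps to the answer to "Why?".
ANSWERS: Dict[str, str] = {
    "Invoices go out late": "Approvals pile up",
    "Approvals pile up": "Only one person can approve",
    "Only one person can approve": "Approval rights were never delegated",
    "Approval rights were never delegated": "No policy defines a backup approver",
}


def ask_why(symptom: str, answers: Dict[str, str], max_whys: int = 5) -> List[str]:
    """Follow the chain of explanations, asking "Why?" at most max_whys times."""
    chain = [symptom]
    while len(chain) <= max_whys and chain[-1] in answers:
        explanation = answers[chain[-1]]
        if explanation in chain:  # guard against circular explanations
            break
        chain.append(explanation)
    return chain


chain = ask_why("Invoices go out late", ANSWERS)
for depth, statement in enumerate(chain):
    print("  " * depth + statement)
print("Candidate root cause:", chain[-1])
```

Stopping after one or two questions (for example `max_whys=2`) returns an intermediate link, which is exactly the kind of answer the technique warns against accepting too early.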

I embarrassingly failed to apply this technique when I was trying to diagnose a problem with a steel reheat furnace in Thailand, as I describe near the bottom of this article. The overall process I describe there tends to go wide, where the Five Whys technique is meant to go deep. However, if you don’t identify the right thread to start with, no amount of pulling on the ones you do identify is likely to lead you to the right source. Therefore I recommend a combination of the two approaches (wide and deep).

I will further opine that most people are not inclined to follow long chains of logic. Of those who can do so, many can only do it in certain (professional and interpersonal) contexts. As you develop this skill, don’t be bashful about getting other people to help. Ask them, “What am I missing?” “What do you know that I don’t know?” If you get enough people with enough different points of view thinking and communicating, you’re more likely to find what you’re looking for. You will also build trust and cooperation, and learn how to dig into problems in new ways.

Other Contexts for Root Cause Analysis

Remembering that business analysis can be used to build, modify, and improve environments as well as processes and products, I want to share some things I learned during my Six Sigma training.

Top Five Reasons for Project Failure

- No stakeholder buy-in: If important participants are not invested in the outcome and won’t take the time and energy to contribute to the effort, you are likely to be short on resources and insight needed for success.

- No champion involvement: This is similar to the situation above, except this usually involves a senior manager starving the effort of resources or otherwise blocking progress. I’ve heard of executives running entire teams to make people happy, but with no intention of letting them actually change anything.

- No root causation: If you don’t identify the right problem, you are unlikely to actually solve it.

- Scope Creep: This involves agreeing to include too many extras in a project, resulting in not having sufficient resources to do the intended work or solve the intended problem completely.

- Poor Team Dynamics: If the members of the team do not communicate, cooperate, or support each other, the team is unlikely to realize much success. This is the biggest killer of projects.

Start General, Then Get Specific

I’m not going into detail on this one. The outline below was taken from my abbreviated study notes. It is a restatement of things I’ve written and said here and elsewhere.

Open – Generate Maximum Suspects

Macro problem

Micro problem statement

Brainstorm

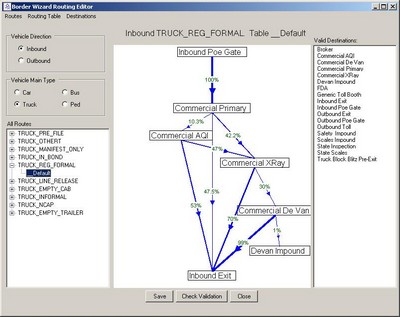

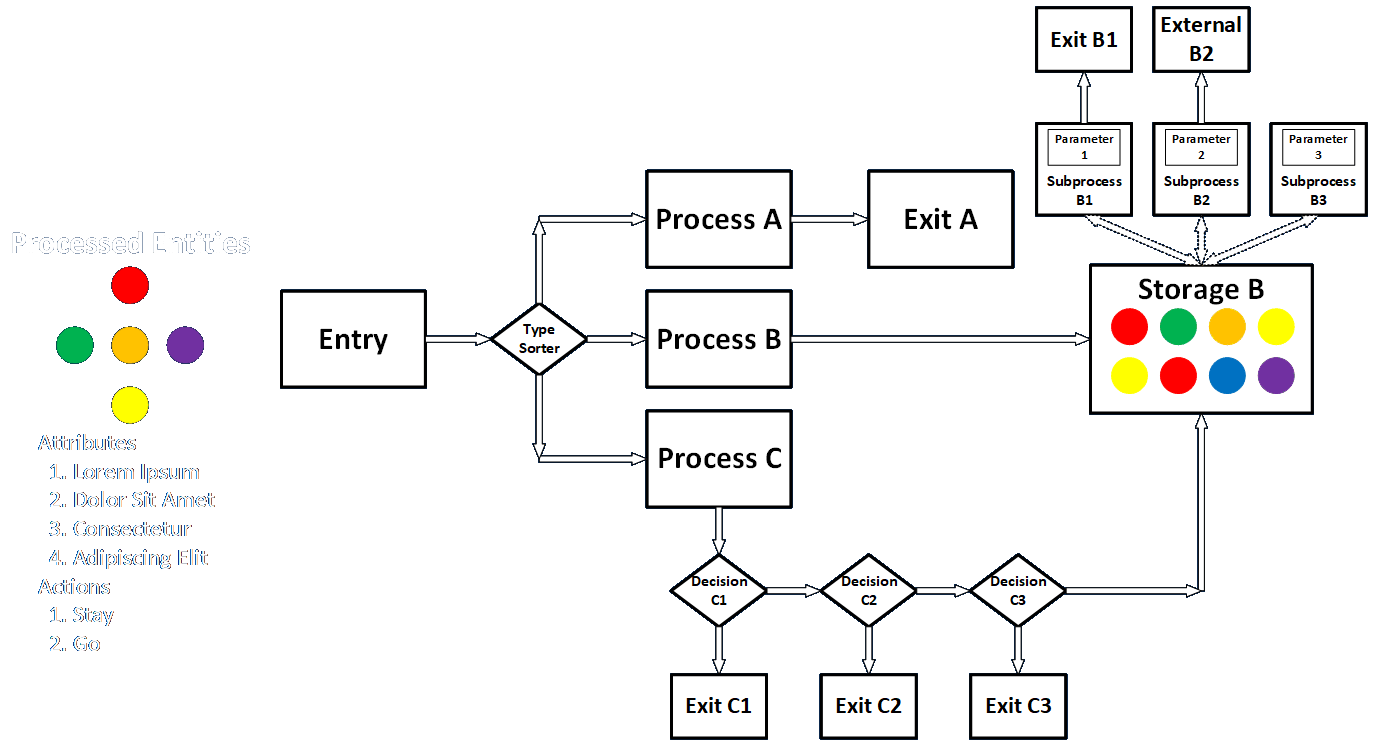

Cause-and-effect diagram

Narrow – Clarify, Remove Duplicates, Narrow

Multi-vote to narrow the list. Multi-voting is a narrowing technique, not a decision-making tool.
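One possible way to tally a multi-vote is sketched below, assuming each participant names a few suspects and each suspect gets at most one vote per person. The ballots, the participant names, and the function name `multi_vote` are hypothetical. The result is a shortlist to investigate further, not a verdict.

```python
from collections import Counter
from typing import Dict, List


def multi_vote(ballots: Dict[str, List[str]], keep: int) -> List[str]:
    """Return the `keep` most-voted suspects (ties broken alphabetically)."""
    tally: Counter = Counter()
    for choices in ballots.values():
        tally.update(set(choices))  # at most one vote per suspect per person
    ranked = sorted(tally.items(), key=lambda item: (-item[1], item[0]))
    return [suspect for suspect, _ in ranked[:keep]]


# Hypothetical ballots, invented for illustration.
ballots = {
    "Ana": ["Manual approval", "Weekly batch run", "Incomplete order data"],
    "Ben": ["Manual approval", "Incomplete order data", "Untrained staff"],
    "Cho": ["Weekly batch run", "Manual approval", "No cycle-time metric"],
}

shortlist = multi_vote(ballots, keep=3)
print(shortlist)
```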

Close – Test Hypotheses

1. Do nothing

2. I said so

3. Basic data collection

4. Scatter Analysis or Regression

5. Design of Experiments
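Option 4 in the list above, scatter analysis or regression, can be illustrated with a simple least-squares line and a correlation coefficient. This is a minimal sketch with made-up numbers: the variable names and data are hypothetical, and a strong correlation only marks a suspect as worth deeper testing; it does not prove causation.

```python
from typing import Sequence, Tuple


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (slope, intercept, r) for an ordinary least-squares line."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / (sxx * syy) ** 0.5  # Pearson correlation coefficient
    return slope, intercept, r


# Hypothetical observations: approval queue length vs. invoice delay in days.
queue_lengths = [2, 4, 6, 8, 10]
delay_days = [1.1, 1.9, 3.2, 3.8, 5.0]

slope, intercept, r = linear_fit(queue_lengths, delay_days)
print(f"delay = {slope:.2f} * queue + {intercept:.2f} (r = {r:.2f})")
```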

Reactive vs. Proactive

Root cause analysis is usually thought of as figuring out what went wrong after it happens. In other words, the typical approach is reactive. A proactive approach is also possible: it examines all aspects of a design in order to prevent, reduce, mitigate, or recover from failures before they happen. One method of doing this is called FMEA, for Failure Mode and Effects Analysis. You should be aware of this, though it isn’t covered in the BABOK. I invite you to check out the Wikipedia article for a brief overview. Note that this method is in keeping with my oft-repeated admonition to be thorough and examine problems from every possible angle.
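To give a flavor of how FMEA prioritizes its findings, one common convention (not described in this article or the BABOK) scores each potential failure mode from 1 to 10 for severity, likelihood of occurrence, and difficulty of detection, then multiplies the three into a Risk Priority Number. The sketch below assumes that convention. The failure modes and their scores are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (almost certain)
    detection: int   # 1 (easily detected) to 10 (practically undetectable)

    def __post_init__(self) -> None:
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")

    @property
    def rpn(self) -> int:
        """Risk Priority Number under the assumed 1-10 scoring convention."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes: List[FailureMode]) -> List[FailureMode]:
    """Sort failure modes from highest to lowest RPN."""
    return sorted(modes, key=lambda mode: mode.rpn, reverse=True)


# Hypothetical failure modes and scores, invented for illustration.
modes = [
    FailureMode("Burner fuel valve sticks", severity=8, occurrence=3, detection=4),
    FailureMode("Temperature sensor drifts", severity=6, occurrence=5, detection=7),
    FailureMode("Operator enters wrong setpoint", severity=5, occurrence=4, detection=2),
]

for mode in prioritize(modes):
    print(f"{mode.rpn:4d}  {mode.description}")
```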