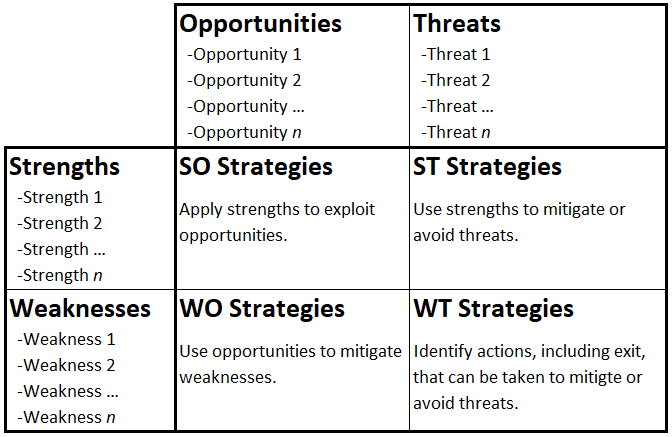

Business capability analysis is the process of evaluating many aspects of a business's ability to accomplish defined goals and to support and develop its staff and customers. It seeks to create a consistent rubric for conducting repeatable analyses that allow participants to compare businesses, analyze strengths and weaknesses, and identify and effect improvements.

The analysis process involves the creation of capability maps. The figure above shows the relevant areas of evaluation and how they might be scored.

The figure illustrates that the analysis considers value to the customer and to the business, identified performance gaps, and different kinds of risk. Because there are so many possible considerations to analyze, as will become apparent, the technique keeps the scoring simple. There's not much point in trying to define the difference between a six and a seven on some arbitrary scale (much less a 67 versus a 73), so a three-level scale of high, medium, and low is more than sufficient to direct effort toward obvious areas of improvement.

Risk can be analyzed with respect to the business (its market functions, reputation, relationships, and financial status), technology, the organization (its people, environment, and function), and the market (the overall state of that industry or segment). See further discussions of risk here.

An organization's capabilities can be identified and examined using any breakdown of operations and capabilities. The breakdown should reflect what the organization does and what it emphasizes. When comparing organizations, it's a good idea to use comparable hierarchical breakdowns.

The BABOK's examples provide some solid guidance in this regard. They identify major areas of inquiry including the organization, the ability to conduct project analysis, professional development, and the quality and structure of management. Each of those areas can be broken down into sub-considerations. Under organizational analysis, for example, we might consider capability analysis, root cause analysis, process analysis, stakeholder analysis, and roadmap construction. Note that these examples are all rather BABOK-y, which is fine, but do not feel bound by these specific areas of examination. Use ones that work for your specific situation. While it's always good to have an internal analysis capability, and the BABOK provides a great roadmap for building one, sometimes you need to be more specific. If you run a distribution business, for example, you might examine your tracking, scheduling, routing, and dispatching capabilities.

You can also create grids that show all the high-level areas of inquiry on one axis and their sub-areas on the other, so that a wide variety of activities can be surveyed at a glance. Some patterns may be obvious, and sometimes you might just want to address individual pain points.

As I say quite often, I have never used this technique as such, though it strikes me as valid and I can clearly see its utility. I have done the equivalent analyses in a lot of different ways. Like many of the fifty BABOK techniques, it's a place to start, an organized and consistent way of doing something. It may or may not be the right way for you to approach any particular analysis, but it's good to be aware of it. Someone thought enough of it to create it, others found it and derived value from it, and it might turn out to be exactly what you need!

Finally, note that you need not draw out the situation for each major area and sub-area of concern as shown in the figure above. Use any method of expression that works. You can just as easily create a spreadsheet or table that shows the relevant scores in grid cells, marked with an X or solid shading in the relevant H/M/L column, by letter (H, M, or L), or by word (High, Medium, or Low). Any of those can be color coded. Use whatever works for you and whatever your customers and colleagues can understand.
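For readers who prefer to keep the table in code rather than a spreadsheet, here is a minimal Python sketch of the H/M/L grid idea. It assumes a single risk column (rather than separate business, technology, organizational, and market risk columns) and uses the distribution-business capabilities mentioned earlier with made-up, purely hypothetical scores. The names `Capability`, `render_grid`, and `improvement_candidates`, and the rule for flagging candidates (high gap plus high value to either the customer or the business), are illustrative assumptions, not part of the BABOK technique.

```python
from dataclasses import dataclass

# The three-level scale: low, medium, high.
LEVELS = ("L", "M", "H")


@dataclass
class Capability:
    area: str
    name: str
    customer_value: str
    business_value: str
    performance_gap: str
    risk: str  # assumption: one combined risk score for simplicity

    def __post_init__(self):
        # Reject anything other than L, M, or H.
        for score in (self.customer_value, self.business_value,
                      self.performance_gap, self.risk):
            if score not in LEVELS:
                raise ValueError(f"score must be one of {LEVELS}, got {score!r}")


def render_grid(caps):
    """Return a plain-text table with one row per capability."""
    header = ["Area", "Capability", "Cust. value", "Bus. value", "Gap", "Risk"]
    rows = [[c.area, c.name, c.customer_value, c.business_value,
             c.performance_gap, c.risk] for c in caps]
    table = [header] + rows
    # Pad each column to the width of its longest cell.
    widths = [max(len(row[i]) for row in table) for i in range(len(header))]
    return "\n".join(" | ".join(cell.ljust(w) for cell, w in zip(row, widths))
                     for row in table)


def improvement_candidates(caps):
    """Hypothetical rule: a high gap plus high customer or business value."""
    return [c.name for c in caps
            if c.performance_gap == "H"
            and "H" in (c.customer_value, c.business_value)]


# Hypothetical example scores for a distribution business.
CAPABILITIES = [
    Capability("Operations", "Tracking", "H", "M", "H", "M"),
    Capability("Operations", "Scheduling", "M", "H", "L", "L"),
    Capability("Operations", "Routing", "M", "H", "H", "H"),
    Capability("Operations", "Dispatching", "L", "M", "M", "L"),
]

if __name__ == "__main__":
    print(render_grid(CAPABILITIES))
    print("Improvement candidates:", improvement_candidates(CAPABILITIES))
```

With these sample scores, Tracking and Routing are flagged. The point is less the code than the habit it encodes: coarse scores, one row per capability, and a simple, explicit rule for deciding where to look first.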