Every customer you work with will have its own interesting variation in culture, and it’s good to get to know what those variations are. The differences may be merely interesting, may be motivating, or may be important to how you relate to the customer.

An early customer I worked with on a business process reengineering effort acknowledged that a certain amount of “slippage” was considered normal in the course of a workday. We were measuring the type, number, and duration of different activities in each type of employee’s workday and expected the process-related effort to add up to something less than 480 minutes (eight full hours). Management understood that people needed some time for administrative and personal activities and, since the employees’ activities could be readily quantified on an objective basis, the company had a good handle on what constituted acceptable performance. I’ve heard rougher stories about how employees can be treated in environments that are relentlessly driven by performance metrics, but this company had a good attitude and appeared to support the well-being of its employees. They proved to be easy to work with in the procedural sense, though they were difficult to work with in other ways.

One of the more interesting and motivating traditions I ever encountered came when I worked with the Royal School of Artillery at its home base at Larkhill, just a couple of miles from Stonehenge (I got up and ran there every other day). The location also figured in the plot of a movie I found interesting, though possibly only because I had spent time there.

The Royal Artillery’s interesting tradition is that the artillery pieces themselves (cannons in the old days and everything from howitzers to air defense missiles today) nominally serve as the unit colors, in place of a formal flag or guidon. Even better, you were supposed to show respect to the equipment by always running toward it and always walking away from it. My opinion on such things has changed over the years, but this remains a fascinating piece of psychology.

Industrial environments are shaded by companies’ approaches to market competition, safety, and location, among other things. I’ve been at a couple of steel mills where employees have been killed, in one case while I was working there. Management’s reaction has ranged from encouraging employees to nap during slow times to ensure they are fresh when things need to happen, to reviewing safety procedures and working to educate the employees further. Those efforts were of course in addition to trying to figure out exactly what went wrong in the first place. It is recognized that locations like caster decks are inherently dangerous, as is molten steel under any conditions.

Some companies run on a bureaucratic basis where employees get paid the same no matter how the business performs, in which case the employees generally move at the same speed and level of effort no matter what may be going on. In companies where employees receive a lower base wage plus bonuses based on production and sales, the employees from top to bottom are generally far more proactive and aggressive in their efforts to keep a plant running at top speed and to fix problems as soon as they occur.

Managers in bureaucratic environments are often more laid back in their approach to getting things done and may be more demanding in terms of documentation and ease of use, while managers in more aggressive and entrepreneurial environments will demand more features and quicker completion, if possible. Ideally you, as a vendor, will always try to provide the best possible product along every axis, but I know it can be tempting to hold out on customers who are troublesome and uncooperative. You may also be tempted to get out as soon as you meet the local manager’s minimum requirements.

In some ways the more adept managers and employees you meet in the field will be able to deal with lesser deliverables in terms of polish and completeness, since they will be able to bring more understanding and interaction to their end of the process. Taking advantage of that actually does a disservice to the better workers and companies, who should get the best of what you have to offer. Over time, of course, you should be providing the best product and service you can by leveraging your own experience and the feedback you get from customers at all levels. Ask them for feedback and listen to what they tell you. Not every idea will be a winner, but they’ll come up with a lot of things you won’t think of.

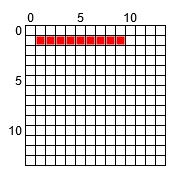

“butt”

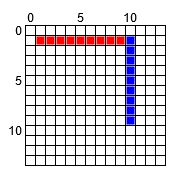

“butt” “round”

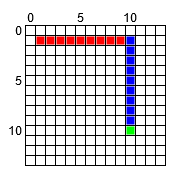

“round” “square”

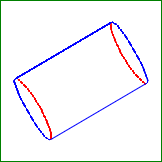

“square”