//schedule for seven hours of arrivals in half-hour blocks

var arrivalSchedule = [0, 1, 2, 6, 7, 7, 8, 9, 7, 6, 4, 2, 1, 0];

var entryDistribution = [[1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0]];

var arrival1 = new ArrivalsComponent(30.0, arrivalSchedule, entryDistribution);
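
//Hypothetical helper (not part of the simulation framework): a quick sanity
//check of arrivalSchedule. It assumes each entry is the number of arrivals in
//one 30-minute block, matching the 30.0 passed to ArrivalsComponent above.
//It totals the schedule and sums consecutive blocks into hourly counts.
function summarizeArrivalSchedule(schedule, blocksPerHour) {
    var total = 0;
    var hourly = [];
    for (var i = 0; i < schedule.length; i++) {
        total += schedule[i];
        var hour = Math.floor(i / blocksPerHour); //index of the hour this block falls in
        if (hourly.length <= hour) {
            hourly.push(0);
        }
        hourly[hour] += schedule[i];
    }
    return { total: total, hourly: hourly };
}
//Usage: summarizeArrivalSchedule(arrivalSchedule, 2) gives a total of 60
//and hourly counts [1, 8, 14, 17, 13, 6, 1], which puts the peak in the fourth hour.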

arrival1.defineDataGroup(2.0, 61, 2, 70, globalNeutralColor, globalValueColor, globalLabelColor); //leave in place

var tempGraphic;

var routingTable = [[1.0],[1.0],[1.0]];

var entry1 = new EntryComponent(routingTable);

entry1.defineDataGroup(2.0, 90, 105, 80, globalNeutralColor, globalValueColor, globalLabelColor); //displayDelay, x, y, valueWidth, border, value, label

entry1.setRoutingMethod(3); //1 single connection, 2 distribution logic, 3 model logic

entry1.setComponentName("Entry1");

tempGraphic = new DisplayElement(entry1, 100, 5, 68, 20, 0.0, false, false, []);

entry1.defineGraphic(tempGraphic);

arrival1.assignNextComponent(entry1);

var pathE1Q0A = new PathComponent();

pathE1Q0A.setSpeedTime(10.0, 1.0);

tempGraphic = new DisplayElement(pathE1Q0A, 0, 0, 160, 37, 0.0, false, false, []);

pathE1Q0A.defineGraphic(tempGraphic);

entry1.assignNextComponent(pathE1Q0A);

var pathE1Q0B = new PathComponent();

pathE1Q0B.setSpeedTime(10.0, 1.0);

tempGraphic = new DisplayElement(pathE1Q0B, 160, 37, 0, 0, 0.0, false, false, []);

pathE1Q0B.defineGraphic(tempGraphic);

var routingTableQ0 = [[1.0],[1.0],[1.0]]; //not actually needed since routingMethod is 2 here

var queue0 = new QueueComponent(0.0, Infinity, routingTableQ0);

queue0.defineDataGroup(2.0, 90, 205, 80, globalNeutralColor, globalValueColor, globalLabelColor);

queue0.setRoutingMethod(2); //1 single connection, 2 distribution logic, 3 model logic

queue0.setComponentName("Q0");

queue0.setComponentGroup("Primary");

tempGraphic = new DisplayElement(queue0, 90, 50, 88, 56, 0.0, false, false, []);

var tgBaseY = 25; //49;

tempGraphic.addXYLoc(44, tgBaseY + 24);

tempGraphic.addXYLoc(32, tgBaseY + 24);

tempGraphic.addXYLoc(20, tgBaseY + 24);

tempGraphic.addXYLoc(8, tgBaseY + 24);

tempGraphic.addXYLoc(8, tgBaseY + 12);

tempGraphic.addXYLoc(20, tgBaseY + 12);

tempGraphic.addXYLoc(32, tgBaseY + 12);

tempGraphic.addXYLoc(44, tgBaseY + 12);

tempGraphic.addXYLoc(56, tgBaseY + 12);

tempGraphic.addXYLoc(68, tgBaseY + 12);

tempGraphic.addXYLoc(80, tgBaseY + 12);

tempGraphic.addXYLoc(80, tgBaseY);

tempGraphic.addXYLoc(68, tgBaseY);

tempGraphic.addXYLoc(56, tgBaseY);

tempGraphic.addXYLoc(44, tgBaseY);

tempGraphic.addXYLoc(32, tgBaseY);

tempGraphic.addXYLoc(20, tgBaseY);

tempGraphic.addXYLoc(8, tgBaseY);

queue0.defineGraphic(tempGraphic);
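
//The eighteen addXYLoc calls for queue0 trace a serpentine with slots 12 px apart:
//a partial bottom row moving left, a middle row moving right, and a top row
//moving left. The hypothetical helper below (not part of the framework) sketches
//how the same offsets could be generated from a short row description.
//Each row is {dy, fromX, toX}. x steps by `step` from fromX toward toX, inclusive.
function buildQueueSlots(baseY, rows, step) {
    var slots = [];
    for (var r = 0; r < rows.length; r++) {
        var row = rows[r];
        var dir = row.toX >= row.fromX ? 1 : -1; //walk right or left along the row
        for (var x = row.fromX; dir * (row.toX - x) >= 0; x += dir * step) {
            slots.push([x, baseY + row.dy]);
        }
    }
    return slots;
}
//Usage (would reproduce the queue0 offsets, in the same order):
//buildQueueSlots(tgBaseY, [{dy: 24, fromX: 44, toX: 8},
//                          {dy: 12, fromX: 8, toX: 80},
//                          {dy: 0, fromX: 80, toX: 8}], 12)
//    .forEach(function (p) { tempGraphic.addXYLoc(p[0], p[1]); });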

pathE1Q0B.assignNextComponent(queue0);

pathE1Q0A.assignNextComponent(pathE1Q0B);

var pathQ0Q1A = new PathComponent();

pathQ0Q1A.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ0Q1A, 0, 0, 20, 118, 0.0, false, false, []);

pathQ0Q1A.defineGraphic(tempGraphic);

queue0.assignNextComponent(pathQ0Q1A);

var pathQ0Q1B = new PathComponent();

pathQ0Q1B.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ0Q1B, 20, 118, 0, 0, 0.0, false, false, []);

pathQ0Q1B.defineGraphic(tempGraphic);

var pathQ0Q2 = new PathComponent();

pathQ0Q2.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ0Q2, 0, 0, 0, 0, 0.0, false, false, []);

pathQ0Q2.defineGraphic(tempGraphic);

queue0.assignNextComponent(pathQ0Q2);

var pathQ0Q3 = new PathComponent();

pathQ0Q3.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ0Q3, 0, 0, 0, 0, 0.0, false, false, []);

pathQ0Q3.defineGraphic(tempGraphic);

queue0.assignNextComponent(pathQ0Q3);

var routingTableQ123 = [[1.0],[1.0],[1.0]]; //not actually needed since routingMethod is 1 here

var queue1 = new QueueComponent(3.0, 3, routingTableQ123);

queue1.defineDataGroup(2.0, 5, 341, 80, globalNeutralColor, globalValueColor, globalLabelColor);

queue1.setRoutingMethod(1); //1 single connection, 2 distribution logic, 3 model logic

queue1.setExclusive(true);

queue1.setComponentName("Q1");

queue1.setComponentGroup("Primary");

tempGraphic = new DisplayElement(queue1, 5, 131, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53

tempGraphic.addXYLoc(32, tgBaseY);

tempGraphic.addXYLoc(20, tgBaseY);

tempGraphic.addXYLoc(8, tgBaseY);

queue1.defineGraphic(tempGraphic);

pathQ0Q1B.assignNextComponent(queue1);

pathQ0Q1A.assignNextComponent(pathQ0Q1B);

var queue2 = new QueueComponent(3.0, 3, routingTableQ123);

queue2.defineDataGroup(2.0, 175, 341, 80, globalNeutralColor, globalValueColor, globalLabelColor);

queue2.setRoutingMethod(1); //1 single connection, 2 distribution logic, 3 model logic

queue2.setExclusive(true);

queue2.setComponentName("Q2");

queue2.setComponentGroup("Primary");

tempGraphic = new DisplayElement(queue2, 102, 131, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

tempGraphic.addXYLoc(20, tgBaseY);

tempGraphic.addXYLoc(8, tgBaseY);

queue2.defineGraphic(tempGraphic);

pathQ0Q2.assignNextComponent(queue2);

var queue3 = new QueueComponent(3.0, 3, routingTableQ123);

queue3.defineDataGroup(2.0, 175, 341, 80, globalNeutralColor, globalValueColor, globalLabelColor);

queue3.setRoutingMethod(1); //1 single connection, 2 distribution logic, 3 model logic

queue3.setExclusive(true);

queue3.setComponentName("Q3");

queue3.setComponentGroup("Primary");

tempGraphic = new DisplayElement(queue3, 199, 131, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

tempGraphic.addXYLoc(20, tgBaseY);

tempGraphic.addXYLoc(8, tgBaseY);

queue3.defineGraphic(tempGraphic);

pathQ0Q3.assignNextComponent(queue3);

var pathQ1P1 = new PathComponent();

pathQ1P1.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ1P1, 0, 0, 0, 0, 0.0, false, false, []);

pathQ1P1.defineGraphic(tempGraphic);

queue1.assignNextComponent(pathQ1P1);

var pathQ2P2 = new PathComponent();

pathQ2P2.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ2P2, 0, 0, 0, 0, 0.0, false, false, []);

pathQ2P2.defineGraphic(tempGraphic);

queue2.assignNextComponent(pathQ2P2);

var pathQ3P3 = new PathComponent();

pathQ3P3.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ3P3, 0, 0, 0, 0, 0.0, false, false, []);

pathQ3P3.defineGraphic(tempGraphic);

queue3.assignNextComponent(pathQ3P3);

var routingTableP123 = [[0.92, 1.0],[0.85,1.0],[0.548,1.0]]; //rows: citizen, LPR, visitor; columns: exit, secondary

var processTimeP123 = [10.0,20.8,13.0]; //fast, slow visitor, slow citizen or LPR
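
//Assumption: each row of routingTableP123 belongs to one entity type (citizen,
//LPR, visitor) and holds cumulative probabilities over the process's next
//components in the order they are assigned below (exit path first, secondary
//path second). Under that reading, 92% of citizens, 85% of LPRs and 54.8% of
//visitors go straight to Exit1. The hypothetical helper below is not the
//framework's own routing code. It shows how one such row could be sampled:
//draw u in [0, 1) and take the first column whose cumulative value exceeds u.
//Type indices are zero-based here, which is also an assumption.
function pickNextIndex(routingTable, entityType, u) {
    var row = routingTable[entityType];
    for (var j = 0; j < row.length; j++) {
        if (u < row[j]) {
            return j; //first cumulative bound above u selects this destination
        }
    }
    return row.length - 1; //guard against rounding when the last entry is 1.0
}
//Usage: pickNextIndex(routingTableP123, 2, Math.random()) returns 0 (exit)
//for about 54.8% of visitors and 1 (secondary) for the rest.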

var process1 = new ProcessComponent(processTimeP123, 1, routingTableP123);

process1.defineDataGroup(2.0, 5, 477, 80, globalNeutralColor, globalValueColor, globalLabelColor);

process1.setExclusive(true);

process1.setRoutingMethod(3); //1 single connection, 2 distribution logic, 3 model logic

process1.setComponentName("P1");

process1.setComponentGroup("Primary");

tempGraphic = new DisplayElement(process1, 5, 192, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

process1.defineGraphic(tempGraphic);

pathQ1P1.assignNextComponent(process1);

var process2 = new ProcessComponent(processTimeP123, 1, routingTableP123);

process2.defineDataGroup(2.0, 175, 477, 80, globalNeutralColor, globalValueColor, globalLabelColor);

process2.setExclusive(true);

process2.setRoutingMethod(3); //1 single connection, 2 distribution logic, 3 model logic

process2.setComponentName("P2");

process2.setComponentGroup("Primary");

tempGraphic = new DisplayElement(process2, 102, 192, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

process2.defineGraphic(tempGraphic);

pathQ2P2.assignNextComponent(process2);

var process3 = new ProcessComponent(processTimeP123, 1, routingTableP123);

process3.defineDataGroup(2.0, 175, 477, 80, globalNeutralColor, globalValueColor, globalLabelColor);

process3.setExclusive(true);

process3.setRoutingMethod(3); //1 single connection, 2 distribution logic, 3 model logic

process3.setComponentName("P3");

process3.setComponentGroup("Primary");

tempGraphic = new DisplayElement(process3, 199, 192, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

process3.defineGraphic(tempGraphic);

pathQ3P3.assignNextComponent(process3);

var pathP1X1 = new PathComponent();

pathP1X1.setSpeedTime(30, 1.0);

tempGraphic = new DisplayElement(pathP1X1, 0, 0, 0, 0, 0.0, false, false, []);

pathP1X1.defineGraphic(tempGraphic);

process1.assignNextComponent(pathP1X1);

var pathP2X1 = new PathComponent();

pathP2X1.setSpeedTime(30, 1.0);

tempGraphic = new DisplayElement(pathP2X1, 0, 0, 0, 0, 0.0, false, false, []);

pathP2X1.defineGraphic(tempGraphic);

process2.assignNextComponent(pathP2X1);

var pathP3X1 = new PathComponent();

pathP3X1.setSpeedTime(30, 1.0);

tempGraphic = new DisplayElement(pathP3X1, 0, 0, 0, 0, 0.0, false, false, []);

pathP3X1.defineGraphic(tempGraphic);

process3.assignNextComponent(pathP3X1);

var exit1 = new ExitComponent();

exit1.defineDataGroup(2.0, 90, 576, 80, globalNeutralColor, globalValueColor, globalLabelColor);

exit1.setComponentName("Exit1");

tempGraphic = new DisplayElement(exit1, 100, 383, 68, 20, 0.0, false, false, []);

exit1.defineGraphic(tempGraphic);

pathP1X1.assignNextComponent(exit1);

pathP2X1.assignNextComponent(exit1);

pathP3X1.assignNextComponent(exit1);

var pathP1Q10 = new PathComponent();

pathP1Q10.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP1Q10, 0, 0, 0, 0, 0.0, false, false, []);

pathP1Q10.defineGraphic(tempGraphic);

process1.assignNextComponent(pathP1Q10);

var pathP2Q10 = new PathComponent();

pathP2Q10.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP2Q10, 0, 0, 0, 0, 0.0, false, false, []);

pathP2Q10.defineGraphic(tempGraphic);

process2.assignNextComponent(pathP2Q10);

var pathP3Q10 = new PathComponent();

pathP3Q10.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP3Q10, 0, 0, 0, 0, 0.0, false, false, []);

pathP3Q10.defineGraphic(tempGraphic);

process3.assignNextComponent(pathP3Q10);

var routingTableQ10 = [[1.0],[1.0],[1.0]]; //not actually needed since routingMethod is 2 here

var queue10 = new QueueComponent(3.0, Infinity, routingTableQ10);

queue10.defineDataGroup(2.0, 230, 353, 80, globalNeutralColor, globalValueColor, globalLabelColor);

queue10.setRoutingMethod(2); //1 single connection, 2 distribution logic, 3 model logic

queue10.setExclusive(false);

queue10.setComponentName("Q10");

queue10.setComponentGroup("Secondary");

tempGraphic = new DisplayElement(queue10, 230, 253, 64, 48, 0.0, false, false, []);

tgBaseY = 29; //53

tempGraphic.addXYLoc(32, tgBaseY + 12);

tempGraphic.addXYLoc(20, tgBaseY + 12);

tempGraphic.addXYLoc(8, tgBaseY + 12);

tempGraphic.addXYLoc(8, tgBaseY);

tempGraphic.addXYLoc(20, tgBaseY);

tempGraphic.addXYLoc(32, tgBaseY);

tempGraphic.addXYLoc(44, tgBaseY);

tempGraphic.addXYLoc(56, tgBaseY);

queue10.defineGraphic(tempGraphic);

pathP1Q10.assignNextComponent(queue10);

pathP2Q10.assignNextComponent(queue10);

pathP3Q10.assignNextComponent(queue10);

var pathQ10P10 = new PathComponent();

pathQ10P10.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ10P10, 0, 0, 0, 0, 0.0, false, false, []);

pathQ10P10.defineGraphic(tempGraphic);

queue10.assignNextComponent(pathQ10P10);

var pathQ10P11 = new PathComponent();

pathQ10P11.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathQ10P11, 0, 0, 0, 0, 0.0, false, false, []);

pathQ10P11.defineGraphic(tempGraphic);

queue10.assignNextComponent(pathQ10P11);

var routingTableP1011 = [[0.8,1.0],[0.8,1.0],[0.8, 1.0]];

var processTimeP1011 = [40.0,40.0,40.0];

var process10 = new ProcessComponent(processTimeP1011, 1, routingTableP1011);

process10.defineDataGroup(2.0, 5, 477, 80, globalNeutralColor, globalValueColor, globalLabelColor);

process10.setExclusive(true);

process10.setRoutingMethod(3); //1 single connection, 2 distribution logic, 3 model logic

process10.setComponentName("P10");

process10.setComponentGroup("Secondary");

tempGraphic = new DisplayElement(process10, 190, 326, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

process10.defineGraphic(tempGraphic);

pathQ10P10.assignNextComponent(process10);

var pathP10X1 = new PathComponent();

pathP10X1.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP10X1, 0, 0, 0, 0, 0.0, false, false, []);

pathP10X1.defineGraphic(tempGraphic);

process10.assignNextComponent(pathP10X1);

pathP10X1.assignNextComponent(exit1);

var process11 = new ProcessComponent(processTimeP1011, 1, routingTableP1011);

process11.defineDataGroup(2.0, 5, 477, 80, globalNeutralColor, globalValueColor, globalLabelColor);

process11.setExclusive(true);

process11.setRoutingMethod(3); //1 single connection, 2 distribution logic, 3 model logic

process11.setComponentName("P11");

process11.setComponentGroup("Secondary");

tempGraphic = new DisplayElement(process11, 266, 326, 64, 36, 0.0, false, false, []);

tgBaseY = 29; //53;

tempGraphic.addXYLoc(32, tgBaseY);

process11.defineGraphic(tempGraphic);

pathQ10P11.assignNextComponent(process11);

var pathP11X1 = new PathComponent();

pathP11X1.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP11X1, 0, 0, 0, 0, 0.0, false, false, []);

pathP11X1.defineGraphic(tempGraphic);

process11.assignNextComponent(pathP11X1);

pathP11X1.assignNextComponent(exit1);

var pathP10X2 = new PathComponent();

pathP10X2.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP10X2, 0, 0, 0, 0, 0.0, false, false, []);

pathP10X2.defineGraphic(tempGraphic);

process10.assignNextComponent(pathP10X2);

var pathP11X2 = new PathComponent();

pathP11X2.setSpeedTime(20, 1.0);

tempGraphic = new DisplayElement(pathP11X2, 0, 0, 0, 0, 0.0, false, false, []);

pathP11X2.defineGraphic(tempGraphic);

process11.assignNextComponent(pathP11X2);

var exit2 = new ExitComponent();

exit2.defineDataGroup(2.0, 230, 576, 80, globalNeutralColor, globalValueColor, globalLabelColor);

exit2.setComponentName("Exit2");

tempGraphic = new DisplayElement(exit2, 230, 383, 68, 20, 0.0, false, false, []);

exit2.defineGraphic(tempGraphic);

pathP10X2.assignNextComponent(exit2);

pathP11X2.assignNextComponent(exit2);
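
//Worked arithmetic under the same cumulative-routing assumption. P10 and P11 get
//pathP10X1/pathP11X1 (to Exit1) before pathP10X2/pathP11X2 (to Exit2), so
//routingTableP1011 would send 80% to Exit1 and 20% to Exit2. Chaining this with
//routingTableP123 gives each entity type's chance of ending at Exit2:
//(1 - P123 first column) * (1 - P10/P11 first column), i.e.
//citizen 0.08 * 0.2 = 0.016, LPR 0.15 * 0.2 = 0.03, visitor 0.452 * 0.2 = 0.0904.
//The hypothetical helper below computes the same products for any pair of
//two-column tables whose rows line up by entity type.
function exit2Probabilities(primaryTable, secondaryTable) {
    var result = [];
    for (var k = 0; k < primaryTable.length; k++) {
        var toSecondary = 1.0 - primaryTable[k][0]; //share routed to Q10 instead of Exit1
        var secondaryToExit2 = 1.0 - secondaryTable[k][0]; //share of those sent on to Exit2
        result.push(toSecondary * secondaryToExit2);
    }
    return result;
}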

addToGroupStatsNameListWrapper("System");

function initGraphics3DEntities() {

    for (var i = 0; i < global3DMaxEntityCount; i++) {

        define3DEntity(10000, 10000, 5, global3DEntityHeight, 8, globalReadyColor, globalReadyVertexColor);

    }

    normalize3DEntities(0); //parameter is starting index

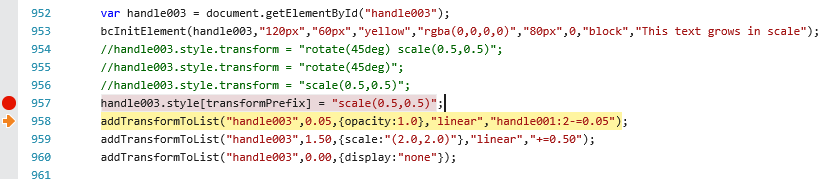

}