Simulation can be used for many different purposes, and I wanted to describe a few of them in detail. I pay special attention to the ones I’ve actually worked with during my career. Note that these ideas inevitably overlap to some degree.

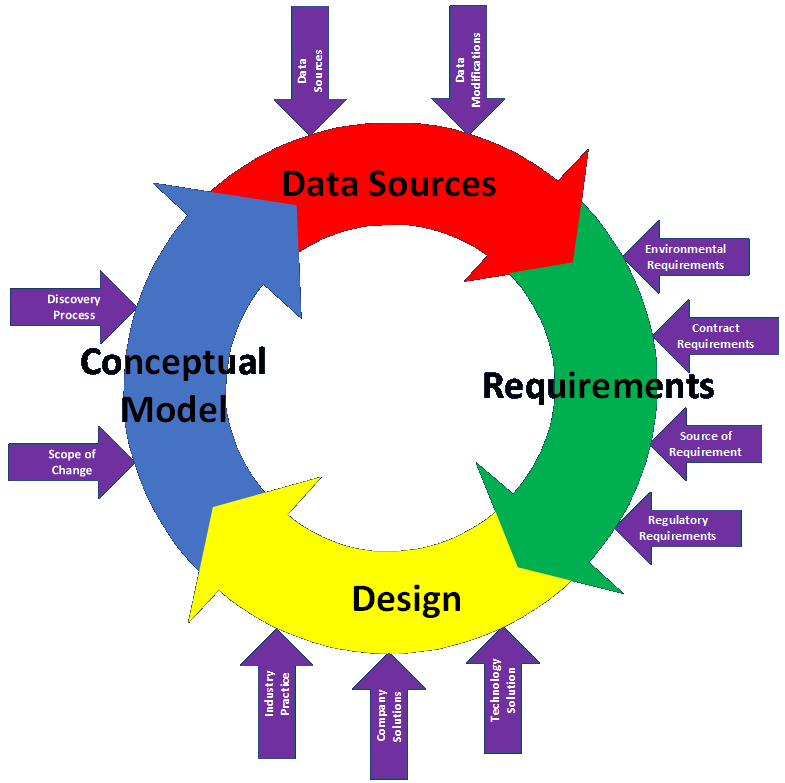

Design and Sizing: Simulation can be used to evaluate the behavior of a system before it’s built. This allows designers to head off costly mistakes in the design stage rather than having to fix problems identified in a working system. There are two main aspects of a system that will typically be evaluated.

Behavior describes how a system works and how all the components and entities interact. This might not be a big deal for typical or steady-state operations but it can be very important when there are many variations and interactions and when systems are extremely complex. I’ve done this for many different applications, including iteratively calculating the concentration of chemicals in a pulp-making process, analyzing layouts for land border crossings, and examining the queuing, heating, and delay behavior of steel reheat furnaces.

Sizing a system involves ensuring that it can handle the desired throughput. For continuous or fluid-based systems this may involve determining the diameter of pipes and the size of tanks and chests meant to store material as buffers. For a discrete system like a border crossing there has to be enough space to move and queue.

The number of parallel operations for certain process stages needs to be determined for all systems. For example, if a pulp mill requires a cleaning stage and the overall flow is 10,000 gallons per minute but the capacity of each cleaner is only 100 gallons per minute then you’ll need a bank of at least 100 cleaners. That’s a calculation you can do without simulation, per se, but other situations are more complex.
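The cleaner arithmetic above can be written as a one-line helper. This is only a sketch; the function name `units_required` is made up for illustration.

```python
import math

def units_required(total_flow: float, unit_capacity: float) -> int:
    """Smallest whole number of parallel units whose combined capacity covers the flow."""
    if unit_capacity <= 0:
        raise ValueError("unit_capacity must be positive")
    return math.ceil(total_flow / unit_capacity)

# Pulp mill example from the text: 10,000 gpm total, 100 gpm per cleaner.
cleaners = units_required(10_000, 100)
print(cleaners)
```

Rounding up matters: any flow above an exact multiple of the unit capacity requires one more unit.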

If an inspection process needs to have a waiting period of no longer than thirty minutes and the average inspection time is two minutes (but may vary between 45 seconds and 20 minutes) and there are 30 arrivals per hour, then how many inspection booths do you need? There’s not actually enough information to know. The design flow in a paper mill can be known but the arrival rate at a border crossing may vary by time of day, time of year, weather, special events, the state of the economy, and who knows how many other reasons. The size of a queue that builds up over time is based on the number of arrivals exceeding the inspection rate over a period of time. That’s not something you can predict in a deterministic way, which is why Monte Carlo techniques are used.

It’s also why performance standards (also known as KPIs or MOEs for Key Performance Indicators or Measures of Effectiveness) are expressed with a degree of uncertainty. The performance standard for a border crossing might actually be set as thirty minutes or less 85% of the time.
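A minimal Monte Carlo sketch of the inspection-booth question might look like the following. Everything beyond the numbers quoted above is an assumption made for illustration: arrivals are treated as a constant-rate Poisson process over an eight-hour day (real arrival rates vary, as noted), and service time is modeled as 0.75 minutes plus an exponential tail capped at 20 minutes, which gives a mean of about two minutes. The function names are hypothetical.

```python
import heapq
import random

def simulate_waits(booths, arrivals_per_hour=30.0, hours=8.0, rng=None):
    """Simulate one day of a first-come, first-served queue; return each wait in minutes."""
    rng = rng or random.Random()
    free_at = [0.0] * booths          # min-heap of times at which each booth becomes free
    waits = []
    t = 0.0
    horizon = hours * 60.0
    while True:
        # Assumed: exponential gaps between arrivals (constant average rate).
        t += rng.expovariate(arrivals_per_hour / 60.0)
        if t > horizon:
            break
        # Assumed service-time shape: 45 s minimum, mean about 2 min, capped at 20 min.
        service = min(20.0, 0.75 + rng.expovariate(1.0 / 1.25))
        earliest = heapq.heappop(free_at)
        start = max(t, earliest)
        waits.append(start - t)
        heapq.heappush(free_at, start + service)
    return waits

def fraction_within(booths, limit_minutes=30.0, runs=200, seed=1):
    """Fraction of all simulated arrivals, across many runs, that wait no longer than the limit."""
    rng = random.Random(seed)
    waits = []
    for _ in range(runs):
        waits.extend(simulate_waits(booths, rng=rng))
    return sum(w <= limit_minutes for w in waits) / len(waits)

for n in (1, 2, 3):
    print(n, "booth(s):", round(100 * fraction_within(n), 1), "% within 30 minutes")
```

Comparing the printed percentage against a target such as 85% is one way to pick the smallest acceptable number of booths under these assumptions. A real study would replace the constant arrival rate with measured time-of-day profiles.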

Operations Research is sometimes also known as tradespace analysis (see the first definition here), in that it attempts to analyze the effect of changing multiple, tightly linked, interacting processes. When I did this for aircraft maintenance logistics we included the effects of reliability, supply quantities and replenishment times, staff levels, scheduled and unscheduled maintenance procedures, and operational tempo. That particular simulation was written in GPSS/H and is said to be the most complex model ever executed in that language.

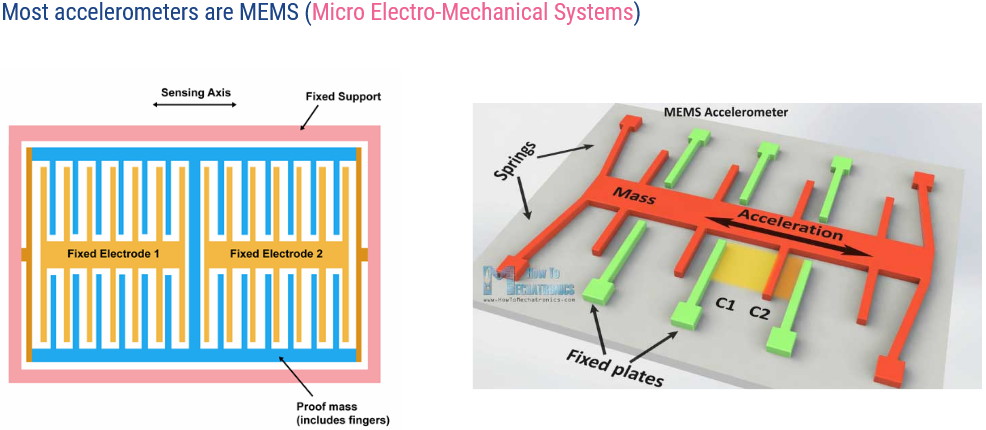

Real-Time Control systems take actions to ensure that a measured quantity, like the temperature in your house, stays as close as possible to a target or setpoint value, like the setting on your thermostat. In this example we say the system is controlling for temperature and that temperature is the control variable. In most cases the control variable can be measured directly, in which case you just need a feedback and control loop that looks at the value read by a mechanical or electrical sensor. In some cases, though, the control variable cannot be measured directly, in which case the control value or values have to be calculated using a simulation.
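For the directly measured case, a feedback loop can be sketched in a few lines. This is a toy on/off thermostat with a small dead band, not a model of any real controller; the room model, heating rate, loss coefficient, and function name are all assumptions chosen for illustration.

```python
def simulate_thermostat(setpoint=20.0, outside=5.0, start=15.0, minutes=240, band=0.5):
    """Run a simple on/off feedback loop and return the temperature after each minute."""
    temp = start
    heater_on = False
    history = []
    for _ in range(minutes):
        # Feedback step: compare the measured value with the setpoint.
        if temp < setpoint - band:
            heater_on = True
        elif temp > setpoint + band:
            heater_on = False
        # Assumed room model: fixed heater gain, loss proportional to the
        # difference between inside and outside temperature (degrees per minute).
        heat_gain = 0.5 if heater_on else 0.0
        heat_loss = 0.02 * (temp - outside)
        temp += heat_gain - heat_loss
        history.append(temp)
    return history

history = simulate_thermostat()
print(min(history[-60:]), max(history[-60:]))
```

Once the room warms up, the temperature cycles in a narrow range around the setpoint. The dead band keeps the heater from switching on and off every step.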

I did this for industrial furnace control systems using combustion heating processes and induction heating processes. Simulation was necessary for two reasons in these systems. One is that the temperature inside a piece of metal cannot be measured easily or cost-effectively in a mass-production environment, so the internal and external temperatures through each workpiece were calculated based on known information, including the furnace temperature, view factors, thermal properties of the materials including conductivity and heat capacity (which themselves changed with temperature), dimensions and mass density of the metal, and radiative heat transfer coefficients. The temperature was calculated for between seven and 147 points (nodes) along the surface and through the interior of each piece depending on the geometry of the piece and the furnace. This allowed for calculation of both the average temperature of a piece and the differential temperature of a piece (highest minus lowest temperature). The system might be set to heat the steel to 2250 degrees Fahrenheit on average with a maximum differential temperature of 20 degrees. This was done so each piece was known to be thoroughly heated inside and outside before being sent to the rolling mill.
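To give a feel for the node-based calculation, here is a heavily simplified sketch: a one-dimensional slab with seven nodes through its thickness, heated by radiation on both faces, stepped forward with an explicit finite-difference scheme. It is not the production model described above. It assumes constant material properties (the real ones varied with temperature), ignores view factors, works in SI units and kelvin, and uses rough illustrative values for steel. All names are hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)

def heat_slab(furnace_k, initial_k, seconds, thickness=0.2, nodes=7,
              k=30.0, rho=7800.0, cp=600.0, emissivity=0.8, dt=10.0):
    """Return node temperatures (K) through a slab after heating for the given time."""
    dx = thickness / (nodes - 1)
    cell = rho * cp * dx              # heat capacity of one full cell per unit face area
    temps = [initial_k] * nodes
    for _ in range(int(seconds / dt)):
        new = temps[:]
        # Interior nodes: conduction to both neighbors.
        for i in range(1, nodes - 1):
            flux = k * (temps[i - 1] - 2.0 * temps[i] + temps[i + 1]) / dx
            new[i] = temps[i] + dt * flux / cell
        # Surface nodes (half cells): radiation from the furnace plus conduction inward.
        for s, inner in ((0, 1), (nodes - 1, nodes - 2)):
            q_rad = emissivity * SIGMA * (furnace_k ** 4 - temps[s] ** 4)
            q_cond = k * (temps[inner] - temps[s]) / dx
            new[s] = temps[s] + dt * (q_rad + q_cond) / (cell / 2.0)
        temps = new
    return temps

def average_temperature(temps):
    """Volume-weighted average: the two surface nodes represent half cells."""
    return (0.5 * temps[0] + sum(temps[1:-1]) + 0.5 * temps[-1]) / (len(temps) - 1)

def differential_temperature(temps):
    """Highest minus lowest node temperature."""
    return max(temps) - min(temps)

temps = heat_slab(furnace_k=1600.0, initial_k=300.0, seconds=3600.0)
print(average_temperature(temps), differential_temperature(temps))
```

The default 10-second step is well inside the stability limit of the explicit scheme for these property values. A finer grid or different properties would need the step rechecked.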

Training using simulation comes in many forms.

Operator training involves interacting with a piece of equipment or a system like an airplane or a nuclear power plant. I trained on two different kinds of air defense artillery simulators in the Army (the Roland and the British Tracked Rapier). More interestingly I researched, designed, and implemented thermohydraulic models for full-scope nuclear power plant training simulators for the Westinghouse Nuclear Simulator Division. These involved building a full-scale mockup of every panel, screen, button, dial, light, switch, alarm, recorder, and meter in the plant control room. Instead of being connected to a live plant it was connected to a simulation of every piece of equipment that affected or was affected by an item the operators could see or touch. The simulation included the operation of equipment like valves, pumps, sensors, and control and safety circuits; fluid models that simulated flow, heat transfer, and state changes; and electrical models that simulated power, generators, and bus conditions.

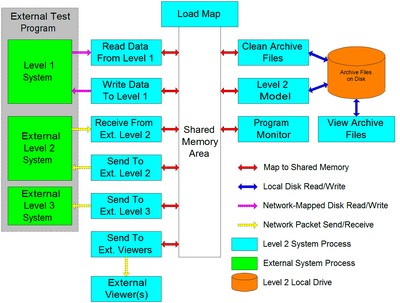

Participatory training involves moving through an environment, often with other participants. One company I worked with built evacuation simulations which were later modified to be incorporated into multi-player training systems for event security and emergency response. I defined the system and behavior characteristics that needed to be included and designed the screen controls that allowed users to set and modify the parameters. I also wrote real-time control and communication modules to allow our systems to communicate and integrate with partner systems in a distributed environment.

Risk Analysis can be performed using simulations combined with Monte Carlo techniques. This provides a range of results across multiple runs rather than a single or point result, and allows analysis of how often certain events occur relative to a desired threshold, expressed as a percentage. I’ve done this as part of the aircraft support logistics simulation I described above.
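The general pattern can be sketched as follows. The `run_once` function is a hypothetical stand-in for a full simulation run (here, the total duration of five tasks with assumed triangular durations). The point is the summary: a spread of results plus the percentage of runs that exceed a threshold.

```python
import random
import statistics

def run_once(rng):
    """Hypothetical stand-in for one simulation run: total time of five sequential tasks."""
    return sum(rng.triangular(1.0, 6.0, 2.0) for _ in range(5))

def risk_summary(threshold, runs=5000, seed=7):
    """Run the model many times and summarize the spread and the exceedance percentage."""
    rng = random.Random(seed)
    results = [run_once(rng) for _ in range(runs)]
    cuts = statistics.quantiles(results, n=20)   # cut points at 5%, 10%, ..., 95%
    return {
        "min": min(results),
        "p05": cuts[0],
        "median": statistics.median(results),
        "p95": cuts[-1],
        "max": max(results),
        "pct_over_threshold": 100.0 * sum(r > threshold for r in results) / runs,
    }

print(risk_summary(threshold=18.0))
```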

Economic Analysis may be carried out by adding cost factors to all relevant activities, multiplying them by the number of occurrences, and totaling everything up. Note that economic effects can only be calculated for processes that can truly be quantified. Human action in unbounded activities can never be accurately quantified, both because humans have an infinite number of choices and because it would be impossible to collect the data even if all possible activities could be identified, so simulation of unbounded economies and actors is not possible. Simulating the cost of a defined and limited activity like an inspection or manufacturing process is possible because the possible actions are limited, definable, and collectable. I built this feature directly into the system I created for building simulations of medical practices.
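The cost roll-up itself is simple. A sketch, with made-up activity names and unit costs standing in for whatever a simulation run would actually count:

```python
def total_cost(unit_costs, occurrences):
    """Sum of (cost per occurrence x number of occurrences) over all counted activities."""
    return sum(unit_costs[activity] * count for activity, count in occurrences.items())

# Hypothetical figures: cost per occurrence and counts tallied during a run.
unit_costs = {"primary_inspection": 4, "secondary_inspection": 25, "document_scan": 1}
occurrences = {"primary_inspection": 1200, "secondary_inspection": 90, "document_scan": 1500}

print(total_cost(unit_costs, occurrences))
```

Keeping the occurrence counts separate from the unit costs means the same run can be re-priced without being re-simulated.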

Interestingly, cost data can be hard to acquire. This is understandable in the case of external cost data but less so for data from other departments within the same organization. Government departments are notorious for protecting their “rice bowls.” Employee costs are another sensitive area. They can be coded or blinded in some way, for example by dividing all amounts by a set factor so relative costs may be discerned but not absolute costs. Spreadsheets containing occurrence counts with costs left blank can be provided to customers or managers to fill out and analyze on their own.
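The blinding idea can be illustrated with a short sketch. The roles, rates, and divisor below are invented; only the holder of the real data would know the factor.

```python
def blind_costs(costs, factor):
    """Divide every amount by the same undisclosed factor, preserving relative costs."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return {name: amount / factor for name, amount in costs.items()}

# Hypothetical hourly rates and an arbitrary divisor.
real = {"inspector": 42.0, "supervisor": 63.0}
blinded = blind_costs(real, factor=7.0)

# The ratio between any two blinded costs matches the ratio of the real costs.
print(blinded["supervisor"] / blinded["inspector"], real["supervisor"] / real["inspector"])
```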

Impact Analysis involves the assessment of changes in outcomes resulting from changes to inputs or parameters. Many of the simulations I’ve worked with have been used in this way.

Process Improvement (including BPR) is based on assessing the impacts of changes that make a process better in terms of throughput, loss, error, accuracy, cost, resource usage, time, or capability.

Entertainment is a fairly obvious use. Think movies and video games.

Sales can also be driven by simulations, particularly for demonstrating benefits. Simulations can also show how things work visually, which can be impressive to people in certain situations.

Funny story: One company did a public demo of a 3D model of a border crossing. It was a nice model that included some random trees and buildings around the perimeter of the property for effect. Some of the notional buildings were houses that weren’t intended to be particularly accurate as far as design or placement. A lady viewing the demo said the whole thing was probably wrong because her house wasn’t the right color. She wouldn’t let it go.

You never know what some people will think is important.