The simulations I’ve written, designed, specified, and utilized have incorporated a number of different features. I found it interesting that I was able to describe them in opposing pairs.

Continuous vs. Discrete-Event

I’ve gone into detail about continuous and discrete-event simulations elsewhere, so I won’t repeat it all now. I will say that continuous simulations are based on differential equations integrated stepwise over time. They tend to run using time steps of a constant size. If multiple events occur in a given time interval, they are all processed at once during the next execution cycle. Discrete-event simulations process events one by one, in time order, with intervals of any duration between them. They can also handle wait..until conditions. Hybrid architectures are also possible.
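As a rough illustration of the difference, here is a minimal Python sketch. The cooling equation, the function names, and the rule that each arrival schedules a departure 2.5 time units later are all assumptions made up for the example, not taken from any of the systems described in this post.

```python
import heapq


def run_continuous(temp0, ambient, k, dt, t_end):
    """Fixed-step explicit Euler integration of dT/dt = -k * (T - ambient)."""
    steps = round(t_end / dt)
    temp = temp0
    for _ in range(steps):
        # Every cycle advances the state by the same constant time step.
        temp += dt * (-k * (temp - ambient))
    return temp


def run_discrete_event(initial_events):
    """Process (time, name) events one at a time, in time order."""
    queue = list(initial_events)
    heapq.heapify(queue)
    log = []
    while queue:
        # The clock jumps straight to the next event, whatever the gap.
        now, name = heapq.heappop(queue)
        log.append((now, name))
        # Hypothetical rule: each arrival schedules a departure 2.5 units later.
        if name == "arrive":
            heapq.heappush(queue, (now + 2.5, "depart"))
    return log


final_temp = run_continuous(temp0=100.0, ambient=20.0, k=0.1, dt=0.5, t_end=10.0)
event_log = run_discrete_event([(4.0, "arrive"), (1.0, "arrive")])
```

The continuous loop does the same amount of work every step whether or not anything interesting happens, while the event loop only does work when an event comes off the queue.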

Interactive vs. Fire-And-Forget

Interactive simulations can accept inputs from users or external processes at any time. They can be paused and restarted, and can sometimes be run at multiples or fractions of the base speed. Examples of interactive simulations are training simulators, real-time process control simulations, and video games.
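One simple way to support pausing and speed multipliers is to keep simulated time separate from wall-clock time. The sketch below assumes a hypothetical `SimClock` class; it is only meant to show the idea, not how any particular simulator does it.

```python
class SimClock:
    """Maps elapsed wall-clock time to simulated time (illustrative only)."""

    def __init__(self, speed=1.0):
        self.speed = speed      # 2.0 = double speed, 0.5 = half speed
        self.paused = False
        self.sim_time = 0.0

    def advance(self, wall_dt):
        """Advance simulated time by wall_dt seconds of real time."""
        if not self.paused:
            self.sim_time += wall_dt * self.speed
        return self.sim_time


clock = SimClock(speed=2.0)
after_fast = clock.advance(1.0)    # one real second at double speed
clock.paused = True
after_pause = clock.advance(5.0)   # paused, so simulated time holds still
clock.paused = False
clock.speed = 0.5
after_slow = clock.advance(1.0)    # one real second at half speed
```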

Non-Interactive or Fire-and-Forget simulations typically run at one hundred percent duty cycle for the fastest possible completion. This type of simulation is generally used for design or analysis.

Real-Time vs. Non-Real-Time

Real-Time systems include wait states so they run at the same rate as time in the real world. This means that the code meant to run in a given time interval absolutely must complete within the time allotted for that interval.
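A minimal sketch of such a loop follows, assuming a fixed frame length and a hypothetical `step` callback that does one frame of work. The `clock` and `sleep` parameters are injectable only so the loop can be exercised without actually waiting; a production real-time system would rely on the operating system's scheduling facilities rather than a plain sleep.

```python
import time


def run_real_time(step, n_frames, frame_s, clock=time.monotonic, sleep=time.sleep):
    """Call step(frame) once per frame and wait out the rest of each frame.

    Returns the number of frames that finished after their deadline.
    """
    overruns = 0
    next_deadline = clock() + frame_s
    for frame in range(n_frames):
        step(frame)
        remaining = next_deadline - clock()
        if remaining > 0:
            sleep(remaining)    # the wait state that holds the loop to real time
        else:
            overruns += 1       # the work did not fit in its allotted interval
        next_deadline += frame_s  # deadlines stay on a fixed schedule
    return overruns


class FakeClock:
    """Stand-in clock so the example runs instantly (an assumption for demo use)."""

    def __init__(self):
        self.t = 0.0

    def now(self):
        return self.t

    def sleep(self, seconds):
        self.t += seconds


fake = FakeClock()


def work(frame):
    # Frame 2 takes far longer than the 0.1 s frame; the others are quick.
    fake.t += 0.25 if frame == 2 else 0.01


overruns = run_real_time(work, n_frames=5, frame_s=0.1, clock=fake.now, sleep=fake.sleep)
```

Because the deadlines stay on a fixed schedule, one long frame here also makes the following frame late, which is the sort of condition a "not keeping up" alarm is meant to catch.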

When a control system I wrote for a steel furnace in South Carolina started throwing alarms for not keeping up, I used the Performance Monitor program in Windows to determine that a database process running on the same machine would consume all the execution time for a couple of minutes, a couple of times a day. This prevented the simulation from running at full speed, which would have caused unwanted calculation and event-handling errors. I ended up being able to install the control code on a different system where it didn’t have to compete for resources.

Non-Real-Time systems can run at any speed. Fire-and-forget systems tend to run at full speed using all available CPU resources. Interactive simulations may run faster or slower than real-time. The computer game SimCity, for example, simulates up to 200 years of game activity in a few tens of hours of game play. Scientific simulations of nanoscale physical events may simulate a few microseconds of real activity over dozens of hours of computing time.

Single-Platform vs. Distributed

Single-Platform simulations run on a single machine and a single CPU. This is typical of single-threaded desktop programs.

Distributed systems run across multiple CPUs or multiple machines. This arrangement incurs communication and synchronization overhead. I’ve written code for several kinds of distributed systems.

The first time I encountered a multi-processor architecture was for the nuclear plant trainers hosted on Gould/Encore SEL 32/8000-9000 series computers. These systems featured four 32-bit CPUs that all addressed (effectively) the same memory.

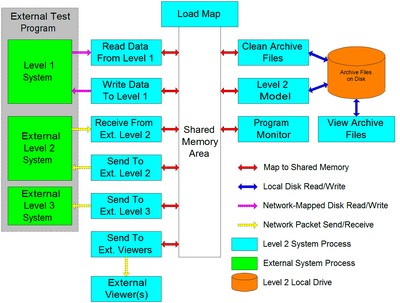

The next time was for the real-time control systems I wrote for industrial furnaces for the metals industry. They included two different kinds of distributed processing. One involved running multiple processes on a single time-slicing CPU, with the processes communicating with each other through shared memory. In some ways that was like using the Gould/Encore systems. The other kind of distributed processing involved communicating with numerous other computing systems in the plant, at least one of which was itself a simulation in some cases. This kind of architecture employed many different forms of inter-process communication. The diagram below describes this kind of system, which I implemented on VMS and Windows systems.

I wrote the communication code that integrated an interactive evacuation system with a larger threat detection and mitigation system written by other vendors. It used interface techniques similar to those described above.

I did the same thing for the integration of another evacuation simulation using the HLA or High-Level Architecture protocol. This technique is often used to integrate multiple weapons simulators into a unified battlefield scenario.

Deterministic vs. Stochastic

Deterministic simulations always generate the same outputs given the same inputs and parameters. They are intended to provide point results. The thermal and fluid simulation systems I wrote were all deterministic.

Stochastic simulations incorporate random elements to generate probabilistic results. If numerous iterations are run this type of simulation will generate a distribution of results. Monte Carlo simulations are stochastic.
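The contrast can be sketched in a few lines of Python. The task durations, their ranges, and the function names below are invented for illustration. The deterministic version returns a single point result, while the Monte Carlo version draws each duration from a uniform range and returns one total per iteration, which together form a distribution.

```python
import random
import statistics


def total_time(durations):
    """Deterministic: the same inputs always give the same point result."""
    return sum(durations)


def monte_carlo_total_time(ranges, iterations, seed=None):
    """Stochastic: draw each duration uniformly from its (low, high) range."""
    rng = random.Random(seed)
    return [
        sum(rng.uniform(low, high) for low, high in ranges)
        for _ in range(iterations)
    ]


point_result = total_time([2.0, 3.0, 5.0])

ranges = [(1.0, 3.0), (2.0, 4.0), (4.0, 6.0)]
samples = monte_carlo_total_time(ranges, iterations=1000, seed=1)
mean_total = statistics.mean(samples)
spread = statistics.stdev(samples)
```

Fixing the seed makes a stochastic run repeatable, which helps with debugging; leaving it as `None` gives a different set of samples each time.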