I’ve seen a lot of different things over the years, and they keep suggesting ways things could be done in a more streamlined, integrated way. Of particular interest to me have been the many novel ways of combining ideas to enhance the modern manufacturing process. It’s weird because many of these ideas are in use in different places, but not in all places, and there isn’t any place that uses all of them.

Let me walk through the ideas as they built up in chronological order.

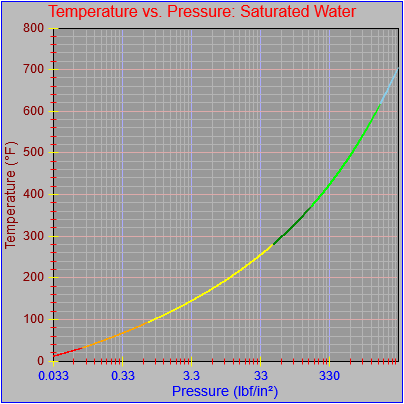

My first engineering job was as a process engineer in the paper industry. I used my knowledge of the many characteristics of wood pulp and its processing to produce drawings and control systems for new plants, and I performed heat and material balances to help size equipment to achieve the desired production and quality targets. I also performed operational audits to see if our installed systems were meeting their contractual targets and to suggest ways to improve the quality of existing systems (I won a prize at an industry workshop for best suggestions for improving a simulated process). I started writing utilities to automate the many calculations I had to do and used my first simulation package, a product called GEMS.

I traveled to a number of sites in far-flung parts of the U.S. and Canada (from Vancouver Island to Newfoundland to Mississippi) and met some of the control system engineers. I didn’t quite know what they did but they seemed to spend a lot more time on site than I did. I also met traveling support engineers from many other vendors. The whole experience gave me a feel for large-scale manufacturing processes, automated ways of doing things, and how to integrate and commission new systems.

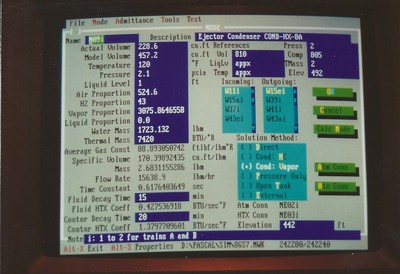

My next job involved writing thermo-hydraulic models for nuclear power plant simulators. I used a Westinghouse tool called the Interactive Model Builder (IMB), which automated the construction of models for fluid systems that always remained in the liquid state. Any parts of a system that didn’t stay liquid had to be modeled using hand-written code. I was assigned increasingly complex models and, since time on the simulator was scarce and the software tools were unwieldy, I ended up building my own PC-based simulation test bed. I followed that up by writing my own system for cataloging all the components for each model, calculating the necessary constants, and automatically writing out the requisite variables and governing equations.

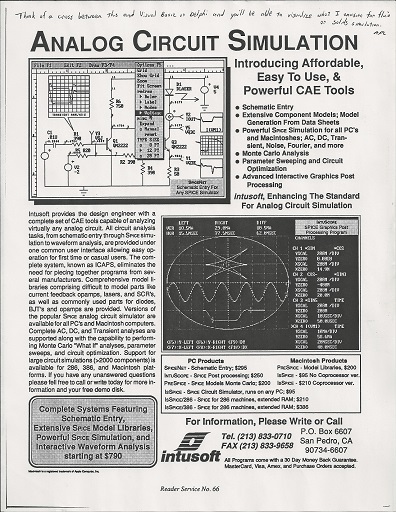

I envisioned expanding the above systems to automatically generate code, and actually started working on it, but that contract ended. Ideally it would have included a CAD-like interface for making the drawings. Right-clicking on the drawing elements would allow the designer to set the properties of the elements, the initial conditions, special equations, and so on. The same interface would display the drawing with live state information while a simulation was running, and the elements could still be clicked to inspect the real-time values in more detail. From a magazine ad for a SPICE simulation package I got the idea that a real-time graph could also be attached to trace the value of chosen variables over time, right on the diagram.

My next job was supposed to be for a competitor of Westinghouse in the simulator space. I went to train in Maryland for a week and then drove down to a plant in Huntsville, AL, where I and a few other folks were informed that it was all a big mistake and we should go back home. I spent the rest of that summer updating the heating recipe system for a steel furnace control system.

My next job was with a contracting company that some of my friends at Westinghouse had worked for. Their group did business process reengineering and document imaging using FileNet (which used to be an independent company but is now owned by IBM). While doing a project in Manhattan I attended an industry trade show at the Javits Center. Over the course of that day the exhibits gave me the idea that a CAD-based GUI could easily integrate document functions like the FileNet system did. Instead of only clicking on system elements to define and control a simulation, why not also click on elements to retrieve and review equipment and system documentation and event logs? Types of documentation could include operator and technical manuals, sales and support and vendor information, historical reports, and so on. Maintenance, repair, and consumption logs could be integrated as well. I’d learn a lot more about that at my next job.

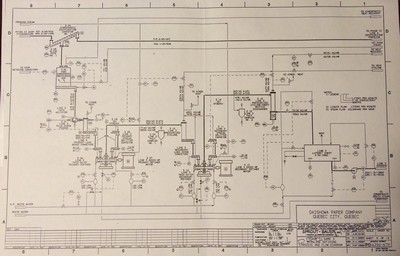

Bricmont’s main business was building turnkey steel reheat furnace systems. There were specialists who took care of the steel, refractory, foundations, hydraulics, and so on, and when it came to the control systems the Level 1 guys did the main stuff with PLCs and PC-based HMI systems running Wonderware and the like. As a Level 2 guy I wrote model-predictive thermodynamic and material handling systems for real-time supervisory control. Here I finally learned why the control system guys spent so much time in plants (and kept right on working at the hotel, sometimes for weeks on end). I once completed a 50,000-line system in three months, with the last two months consisting of continuous 80-hour weeks. I also learned many different communication protocols, data logging and retrieval, and the flow of material and data through an entire plant.

Model-predictive control involves simulating a system into the future to see if the current control settings will yield the desired results. This method is used when the control variable(s) cannot be measured directly (like the temperature inside a large piece of steel), and when the system has to react intelligently to changes in the pace of production and other events.
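To make the look-ahead idea concrete, here is a minimal sketch and not the code from my actual systems. It assumes a single lumped first-order heating model with a made-up coefficient `k`, and the function names and candidate setpoints are purely illustrative. A real furnace model tracks temperature gradients through the steel and across multiple zones.

```python
def predict_discharge_temp(piece_temp, furnace_temp, minutes_left, k=0.02, dt=1.0):
    """Step an assumed first-order heating model forward in time.

    k is a hypothetical heat-transfer coefficient (1/min); dt is the time step (min).
    Returns the predicted piece temperature when it leaves the furnace.
    """
    steps = int(round(minutes_left / dt))
    for _ in range(steps):
        # The piece warms in proportion to its difference from the furnace temperature.
        piece_temp += k * (furnace_temp - piece_temp) * dt
    return piece_temp


def choose_setpoint(piece_temp, minutes_left, target_temp, candidates):
    """Return the lowest candidate setpoint predicted to reach the target, or None."""
    for setpoint in sorted(candidates):
        predicted = predict_discharge_temp(piece_temp, setpoint, minutes_left)
        if predicted >= target_temp:
            return setpoint
    return None  # no candidate gets the piece hot enough in the time remaining
```

Calling `choose_setpoint` again whenever the expected time remaining changes is what lets the controller react to a change in the pace of production: under this toy model, a slower pace gives the piece more time in the furnace, so a lower setpoint is enough.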

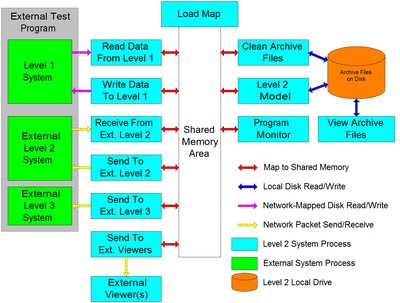

The architecture of my Level 2 systems, which I wrote myself for PC-based systems and with a comms guy on DEC-based systems, looked roughly like this, with some variations.

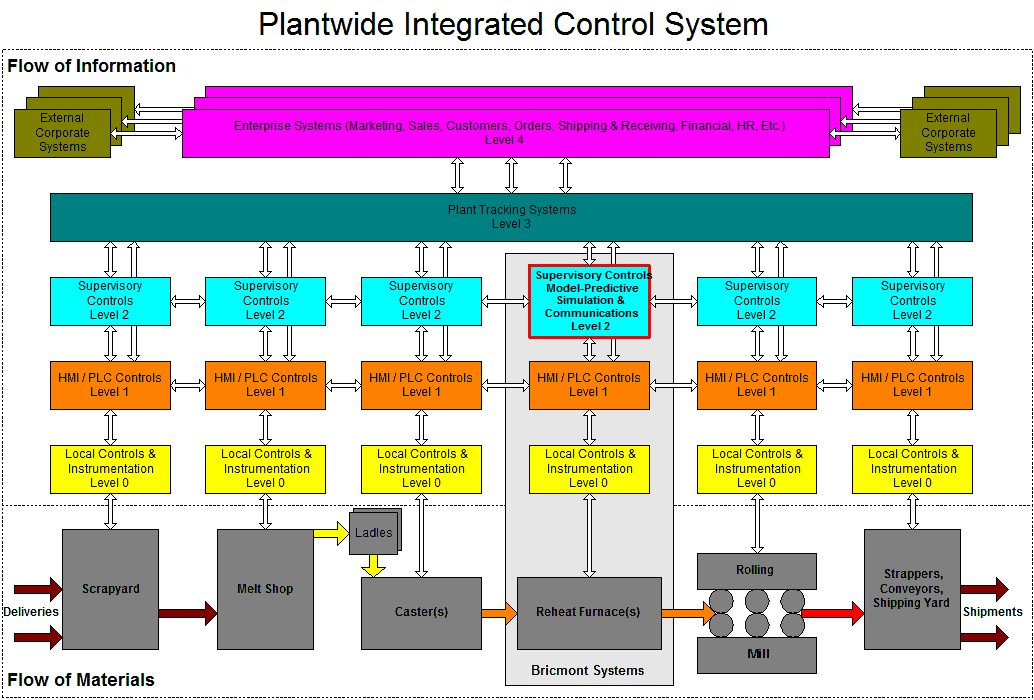

This fit into the wider plant system that looked roughly like this:

The Level 1 layer was closest to the hardware and provided most of the control and operator interaction. The Level 2 layer incorporated supervisory controls that involved heavy calculation. Control room operators typically interacted with Level 2 systems through the Level 1 interface, but other engineers could monitor the current and historical data directly through the Level 2 interface. Operational data about every batch and individual workpiece was passed from machine to machine, and higher-level operating and historical data was passed to the Level 3 system, which optimized floor operations and integrated with what they called the Level 4 layer, which incorporated the plant’s ordering, shipping, scheduling, payroll, and other functions. This gave me a deep understanding of what can be accomplished across a thoughtfully designed, plant-wide, integrated system.

I also maintained a standardized control system for induction melting furnaces that were installed all over the world. That work taught me how to internationalize user interfaces in a modular way, and to build systems that were ridiculously flexible and customizable. The systems were configured with a simple text file in a specified format. While I was maintaining this system I helped a separate team design and build a replacement software system using better programming tools and a modern user interface.

My next job involved lower-level building controls and consisted mostly of coding and fixing bugs. It was a different take on things I’d already seen. I suppose the main thing I learned there was the deeper nature of communications protocols and networking.

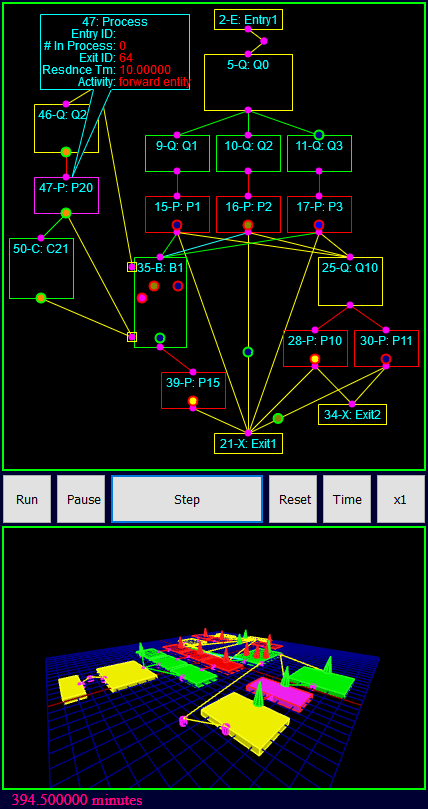

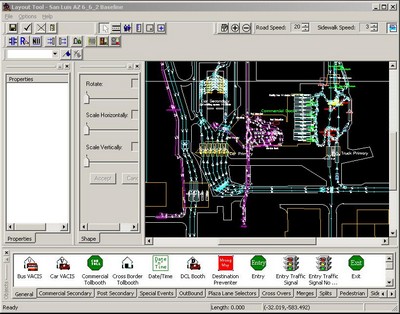

My next job, at Regal Decision Systems, gave me a ton of ideas. I built a tool on my own to build models of the operation of medical offices. I learned discrete-event simulation while doing this, where up to that point I had been doing continuous simulations only. I also included the ability to perform economic calculations so the monetary effects of proposed changes could be measured. The company developed other modeling tools for airports, building evacuations, and land border crossings, some of which I helped design, some of which I documented, and many of which I used for multiple analyses. I visited and modeled dozens of border facilities all over the U.S., Canada, and Mexico, and the company built custom modeling tools for each country’s ports of entry. They all incorporated a CAD-based interface and I learned a ton about performing discovery and data collection. Some of the models used path-based movement and some of them used grid-based movement. The mapping techniques are a little different but the underlying simulation techniques are not.

The path-based model included a lot of queueing behaviors, scheduling, buffer effects, and more. All of the discrete-event models included Monte Carlo effects and were run over multiple iterations to generate a range of possible outcomes. This taught me how to evaluate systems in terms of probability. For example, a system might be designed to ensure no more than a ten-minute wait time on eighty-five percent of the days of operation.
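The probability framing can be sketched in a few lines. This is only an illustration, not one of the tools described above: it assumes a single-server, first-come-first-served queue with exponentially distributed arrival gaps and service times, and it takes "wait time" to mean the longest wait on a given day. The function names and parameters are made up for the example.

```python
import random


def simulate_day_max_wait(rng, arrivals_per_hour, mean_service_min, hours=8.0):
    """Simulate one day of a single-server FIFO queue; return the longest wait (minutes)."""
    clock = 0.0           # arrival time of the current customer, in minutes
    server_free_at = 0.0  # time at which the server finishes its current customer
    max_wait = 0.0
    while True:
        # Random gap until the next arrival (rate is per minute).
        clock += rng.expovariate(arrivals_per_hour / 60.0)
        if clock > hours * 60.0:
            break
        start = max(clock, server_free_at)  # wait if the server is still busy
        max_wait = max(max_wait, start - clock)
        server_free_at = start + rng.expovariate(1.0 / mean_service_min)
    return max_wait


def fraction_of_days_meeting_target(days, max_wait_min, arrivals_per_hour,
                                    mean_service_min, seed=1):
    """Run many simulated days and return the fraction that stay within the wait target."""
    rng = random.Random(seed)  # seeded so a given analysis can be repeated exactly
    days_ok = sum(
        simulate_day_max_wait(rng, arrivals_per_hour, mean_service_min) <= max_wait_min
        for _ in range(days)
    )
    return days_ok / days
```

A design would pass the example criterion if `fraction_of_days_meeting_target(1000, 10.0, ...)` came back at 0.85 or higher for the proposed staffing and arrival rates.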

I learned a lot about agent-based behaviors when I specified the parameters for building evacuation simulations. I designed the user interfaces to control all the settings for them and I implemented communications links to initialize and control them. Some of the systems supported the movement of human-in-the-loop agents that moved in the virtual environment (providing a threat or mitigating it and providing security and crowd control) among the automated evacuees.

At my next job I reverse-engineered a huge agency staffing system that calculated the staffing needs based on activity counts at over 500 locations across the U.S. and a few remote airports. I also participated in a large, independent verification, validation, and accreditation (VV&A) effort for a complex, three-part, deterministic simulation designed to manage entire fleets of aircraft through their full life cycle.

The main thing I did, though, was support a simulation that performed tradespace analyses of the operation and logistic support of groups of aircraft. The model considered flight schedules and operating requirements, the state and mission capability of each aircraft, reliability engineering and the accompanying statistics and life cycles, the availability of specialized support staff and other resources, scheduled and unscheduled maintenance, repair or replace decisions, stock quantities and supply lines, and cannibalization. The vendor of the programming language in which the simulation was implemented stated that this was the largest and most complex model ever executed using that language (the model was written in GPSS/H; the control wrapper and UI were written in C#). I learned a lot about handling huge data sets, conditioning noisy historical input data, Poisson functions and probabilistic arrival events, readiness analysis, and more.
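As a small illustration of how Poisson statistics connect to stock quantities, here is a sketch that is not taken from that model. It assumes the number of unscheduled demands for a part over a resupply period follows a Poisson distribution with a known mean, and it finds the smallest stock level that covers demand with a chosen confidence. The function names are hypothetical.

```python
import math


def poisson_cdf(k, mean):
    """Return P(N <= k) for a Poisson-distributed count N with the given mean."""
    term = math.exp(-mean)  # P(N = 0)
    total = term
    for n in range(1, k + 1):
        term *= mean / n    # P(N = n) computed from P(N = n - 1)
        total += term
    return total


def min_stock_for_confidence(expected_demand, confidence):
    """Return the smallest stock level whose chance of covering demand meets the confidence."""
    stock = 0
    while poisson_cdf(stock, expected_demand) < confidence:
        stock += 1
    return stock
```

The full simulation did far more than this, since failures, repairs, and supply lines all interact, but the same kind of probabilistic reasoning sits underneath.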

More recently I’ve been exploring more ideas about how all these concepts could be combined in advanced manufacturing systems as follows.

Integration Ideas

- A common user interface could be used to define system operation and layout. It can be made available through a web-based interface to appropriate personnel throughout an organization. It can support access to the following operations and information:

- Real-time, interactive operator control

- Real-time and historical operating data

- Real-time and historical video feeds

- Simulation for operations research and operator training

- Operation and repair manuals and instructions (document, video, etc.)

- Maintenance events and parts and consumption history

- Vendor and service information

- Operational notes and discoveries from analyses and process-improvement efforts

- Notification of scheduled maintenance

- Real-time data about condition-based maintenance

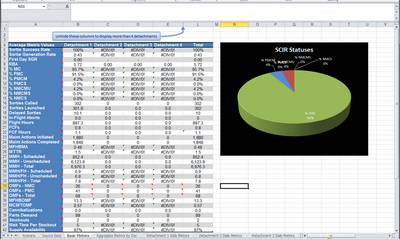

- Management and worker dashboards on many subjects

- Information about the items processed during production can also be captured, as opposed to information about the production equipment. In the steel industry my systems recorded the heating history and profile of every piece of steel as well as the operation of the furnace. In the paper industry plants were later to adopt this kind of tracking (which is more difficult to do because measurements involve a huge amount of manual lab work) but they treat the knowledge like gold and hold it in high secrecy. Depending on the volume of items processed the amounts of data could get very large.

- Most Six Sigma data is based on characteristics of the entities processed. This can be collated with equipment operating data to determine root causes of undesirable variations. The collection of this data can be automated where possible, and integrated intelligently if the data must be collected manually.

- Model-predictive simulation could be used to optimize production schedules.

- Historical outage and repair data can be used to optimize buffer sizes and plan production restarts. It can also be used to plan maintenance activities and provision resources.

- Look-ahead planning can be used to work around disruptions to just-in-time deliveries and manage shelf quantities.

- All systems can be linked using appropriate, industry-standard communications protocols and as much data can be stored as makes sense.

- Field failures of numbered and tracked manufactured items (individual items or batches) can be analyzed in terms of their detailed production history. This can help with risk analysis, legal defense, and warranty planning.

- Condition-based maintenance can reduce downtime by extending the time between scheduled inspections and by preventing outages due to non-graceful failures of equipment.

- Detailed simulations can improve the layout and operation of new production facilities in the design stage. Good 3D renderings can allow analysis and support situational awareness that isn’t possible through other means.

- Economic calculations can support cost-benefit analyses of proposed options and changes.

- Full life cycle analysis can aid in long-term planning, vendor relationships, and so on.

- Augmented reality can improve the speed and quality of maintenance procedures.
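As one example of the ideas in this list, collating data about processed items with equipment operating data could start out as simply as the sketch below. It assumes each item record is a (timestamp, defective) pair and that equipment readings are logged in time order. The record layout and function names are invented for illustration.

```python
from bisect import bisect_right
from statistics import mean


def reading_at(times, values, t):
    """Return the most recent equipment reading taken at or before time t, or None."""
    i = bisect_right(times, t)  # times must be sorted in ascending order
    return values[i - 1] if i else None


def compare_groups(items, times, values):
    """Average the matched equipment reading separately for defective and good items.

    items is an iterable of (timestamp, defective) pairs. The result maps
    True (defective) and False (good) to the mean matched reading, or None
    when a group has no matched items.
    """
    groups = {True: [], False: []}
    for timestamp, defective in items:
        reading = reading_at(times, values, timestamp)
        if reading is not None:
            groups[defective].append(reading)
    return {flag: (mean(vals) if vals else None) for flag, vals in groups.items()}
```

A large gap between the two averages doesn’t prove a root cause, but it shows where to look first.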

The automotive and aircraft industries already do many of these things, but they make and maintain higher-value products in large volumes. The trick is to bring these techniques to smaller production facilities that make lower-value products in smaller volumes. The earlier these techniques are considered for inclusion, the more readily and cheaply they can be leveraged.