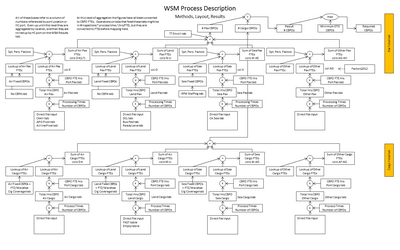

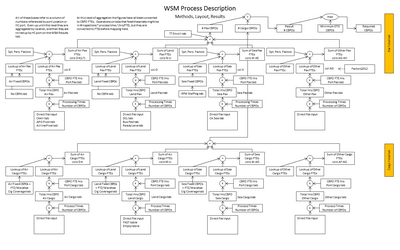

I'm currently building a modular, discrete-event simulation framework in JavaScript that will ultimately be connected to a back-end storage mechanism. It is suited to many forms of process analysis, operations research, and As-Is / To-Be comparisons.
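
For readers unfamiliar with the technique, the core of a discrete-event simulation is a time-ordered event queue. What follows is only a minimal sketch of that idea, not the framework itself: the `Simulation` class, its `schedule` and `run` methods, and the single-server example are all hypothetical names and assumptions chosen for illustration.

```javascript
// Minimal discrete-event loop: events are processed in timestamp order.
// All names here are illustrative assumptions, not the framework's API.
function compare(a, b) {
  // Order by time, then by insertion sequence for same-time events.
  return a.time - b.time || a.seq - b.seq;
}

class Simulation {
  constructor() {
    this.now = 0;    // current simulation time
    this.queue = []; // pending events, kept sorted by (time, seq)
    this.seq = 0;    // tie-breaker so same-time events run in insertion order
  }

  // Schedule `action` to run `delay` time units after the current time.
  schedule(delay, action) {
    const event = { time: this.now + delay, seq: this.seq++, action };
    // Linear insert keeps the sketch short; a binary heap scales better.
    let i = this.queue.length;
    while (i > 0 && compare(this.queue[i - 1], event) > 0) i--;
    this.queue.splice(i, 0, event);
  }

  // Pop events in order, jumping the clock straight to each event's time.
  run(until = Infinity) {
    while (this.queue.length > 0 && this.queue[0].time <= until) {
      const event = this.queue.shift();
      this.now = event.time;
      event.action(this);
    }
  }
}

// Usage: arrivals every 2 time units feed one server with a fixed
// 3-unit service time; later arrivals wait for the server to free up.
const log = [];
const sim = new Simulation();
let busyUntil = 0;

function arrive(s) {
  const start = Math.max(s.now, busyUntil);
  busyUntil = start + 3;
  s.schedule(busyUntil - s.now, (t) => log.push(`done@${t.now}`));
  if (s.now < 4) s.schedule(2, arrive); // arrivals at t = 0, 2, 4
}

sim.schedule(0, arrive);
sim.run();
console.log(log.join(", "));
```

The clock jumps from event to event rather than ticking at a fixed rate, which is what distinguishes this approach from the continuous simulations described further down.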

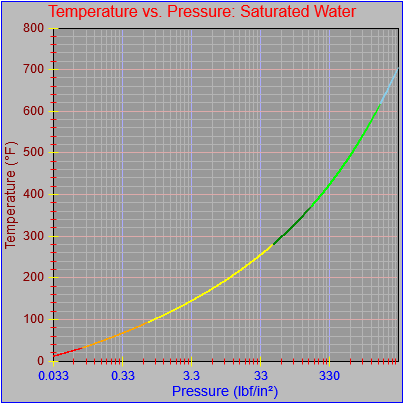

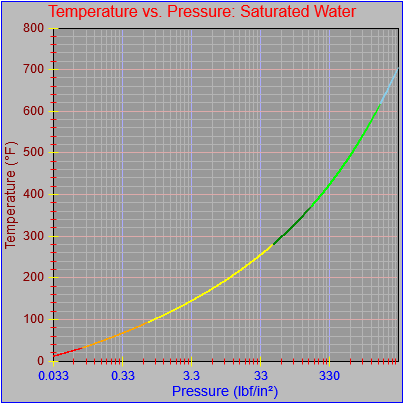

I'm currently building a graph widget that will become an includable, standalone unit. Just about every aspect of the element is configurable and it automatically sizes all of the elements per the settings. The goal is to support a wide range of plot types, user editing, and animated scrolling horizontally and vertically.

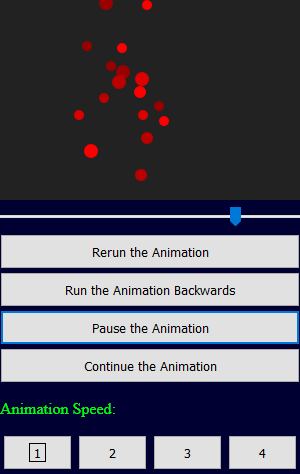

I'm currently building an HTML animation widget to perk up the presentation of web pages. There are almost certainly better ways to do this but it's an interesting, ongoing project.

Dates, events, and details at the link.

I've designed and implemented systems and parts of systems for a wide variety of applications in multiple industries. I've worked in all capacities as part of large and small teams and as a sole practitioner. I've developed enterprise-level business process automation systems, systems for real-time industrial control, real-time operator training, large- and small-scale analytics, and design and decision support. I've developed tools which improve the accuracy, robustness, and speed of development of all of these as well as the delivered systems. I've designed, implemented, tested, delivered, and maintained software on a project, program, and product basis.

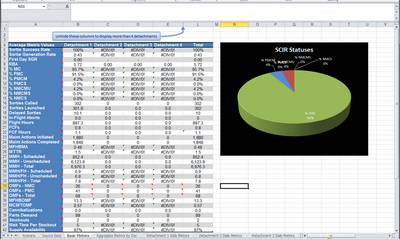

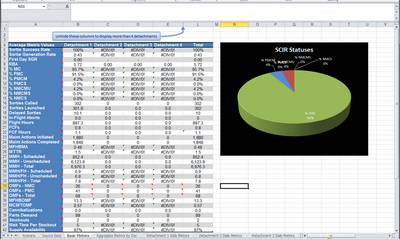

I served as the business analyst and Product Owner for this project, reverse-engineering an existing spreadsheet-based tool used to justify tens of thousands of staff deployed across the country by a major government agency. I served as the liaison between the customer and our in-house technical team, identifying and negotiating requirements, reviewing and verifying data sources and methods, testing software, soliciting feedback, and making presentations.

I was able to leverage many years of experience working in the relevant field environments, collecting data, building models, performing analyses, and making recommendations, but continued to learn from headquarters staff and management as we prepared to extend our replacement software to other major areas of activity that used very different bases of calculation.

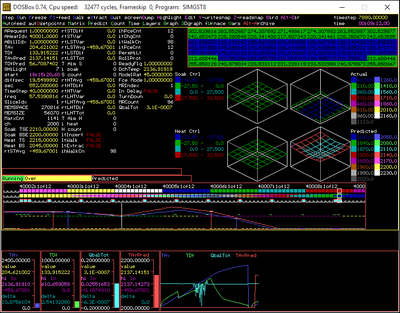

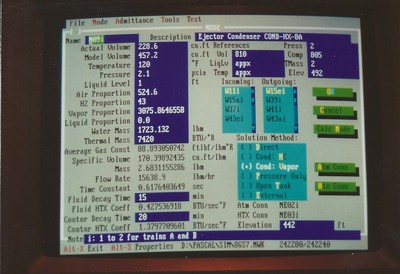

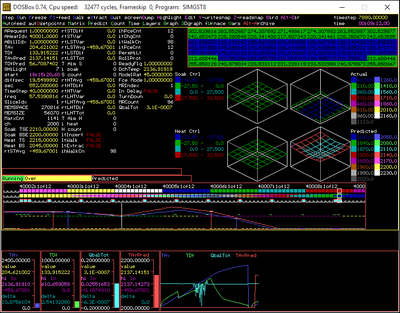

I wrote Level 2 supervisory control systems for steel reheat furnaces in many different configurations. They involved different shapes and sizes of steel to be heated (thin slab, thick slab, square or rectangular billet, round bar, beam blank), different ways of moving the steel through the furnace (rolling, walking, pushing), and different "views" of the steel (top only, top and bottom, top and sides, all sides).

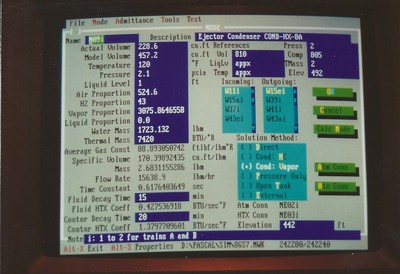

The PLC-based Level 1 systems did the low-level I/O and detailed control, while the Level 2 system read in piece data, movement events, furnace zone temperatures, and control setpoints, then ran simulations to calculate the current and discharge workpiece temperatures, which could not be measured directly. The system would then calculate new zone temperature setpoints, and sometimes additional movement parameters, and send those back to the Level 1 system to use in place of the manually entered setpoints.
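
The predict-then-adjust cycle described above can be illustrated with a deliberately simplified sketch. Everything in it is an assumption made for illustration: a lumped first-order heating model stands in for the real thermal simulation, and the coefficient `k`, the proportional `gain`, the zone data, and the function names are hypothetical rather than taken from the delivered systems.

```javascript
// Advance one piece's temperature by one time step using a lumped,
// first-order model (an assumption; real models resolve the piece interior).
// k is a hypothetical heat-transfer coefficient in 1/min.
function stepTemperature(pieceTemp, zoneTemp, k, dtMin) {
  return pieceTemp + k * (zoneTemp - pieceTemp) * dtMin;
}

// Predict the discharge temperature by simulating the time the piece
// still has to spend in each remaining zone at the current setpoints.
function predictDischarge(pieceTemp, zones, k, dtMin = 1) {
  let t = pieceTemp;
  for (const zone of zones) {
    for (let elapsed = 0; elapsed < zone.minutesRemaining; elapsed += dtMin) {
      t = stepTemperature(t, zone.setpoint, k, dtMin);
    }
  }
  return t;
}

// Nudge the final zone's setpoint in proportion to the predicted
// shortfall, clamped to hypothetical equipment limits.
function adjustSetpoint(zones, predicted, target, gain, limits) {
  const last = zones[zones.length - 1];
  const proposed = last.setpoint + gain * (target - predicted);
  return Math.min(limits.max, Math.max(limits.min, proposed));
}

// Usage with made-up numbers (degrees C, minutes).
const zones = [
  { setpoint: 1000, minutesRemaining: 10 },
  { setpoint: 1200, minutesRemaining: 10 },
];
const predicted = predictDischarge(20, zones, 0.05);
const newSetpoint = adjustSetpoint(zones, predicted, 1150, 0.5, { min: 900, max: 1300 });
console.log(predicted.toFixed(1), newSetpoint);
```

In this toy case the piece is predicted to discharge well below target, so the proposed setpoint rises until it hits the upper limit. That is the kind of value a supervisory layer would hand back to the lower-level controller.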

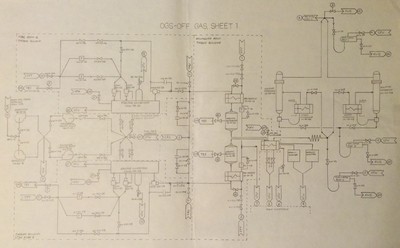

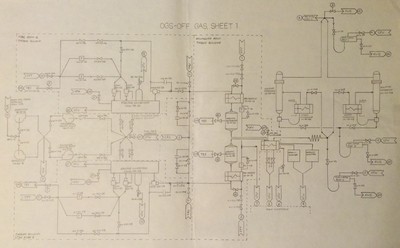

I worked as part of large teams to write thermo-hydraulic simulations of fluid systems in nuclear power plants. These were used to drive full scope, site-specific, operator training simulators.

These simulators were complete replicas of all control panels found in each plant with every physical and informational control and indicator. Like a flight simulator, the idea is to let operators practice responding to unusual and emergency situations without risking plant safety.

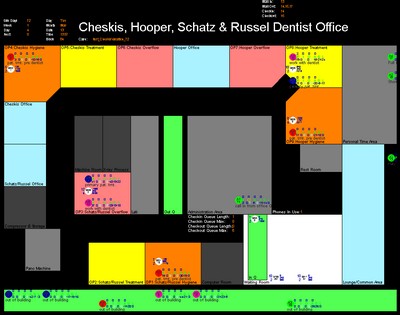

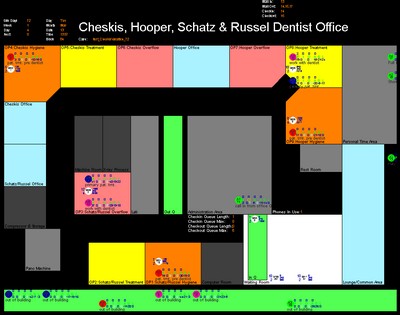

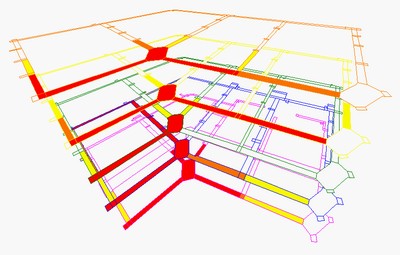

I built a tool not to simulate a single office, but to build a representation of any kind of operation in a flexible, modular way. It included ways to specify an office layout, schedules for patients, staff, rooms, and special equipment, and rules for procedures and event durations.

The simulation considered all of the administrative activity (phone calls in and out, taking and changing appointments, communicating with third parties including insurance companies) as well as treatment, break, and support activity by different types of personnel. The model simulated time, materials, and costs for every activity so the economic impact of every proposed change could be evaluated.

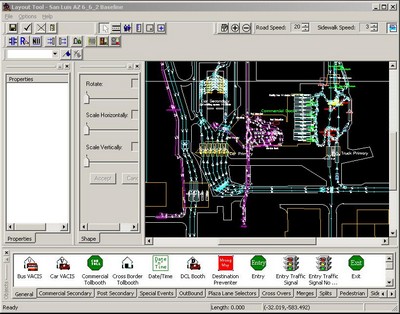

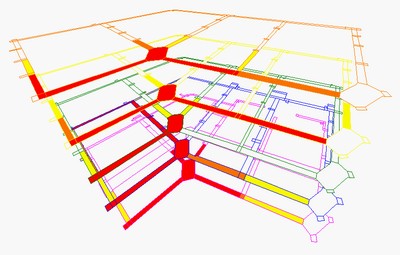

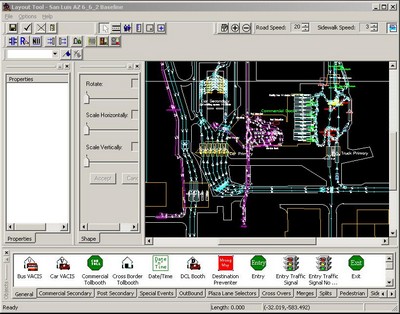

I used this and two companion tools for analysis and design of land border crossings all over the United States, Canada, and Mexico. I was a lead on the Systems Analysis team that collected data at scores of sites and built models that could be used to perform analyses.

The original tool was called BorderWizard but Customs and Border Protection got together with the governments of Canada and Mexico to have us create companion tools called CanSim and SimFronteras. I did a lot of discovery, data collection, and design for those efforts, including producing all the documents outlining requirements, user instructions, and data collection methods.

For a while this was referred to as the "Dream Team." I performed a lot of independent analyses using this tool. I also attended conferences and even used the tool to verify and approve the design of the new St. Stephen crossing built at Calais, ME.

Over the course of jobs in the paper, nuclear, and steel industries I got a career's worth of education about continuous simulation in general and thermodynamics and fluid mechanics in particular, along with a lot of experience in numerical methods (and software engineering... and computer graphics...).

I did a lot of things by hand and used a commercial program called GEMS early on but quickly learned how to do everything in code, not only stick-building simulation codes but building tools to automate the design, documentation, and construction of them.

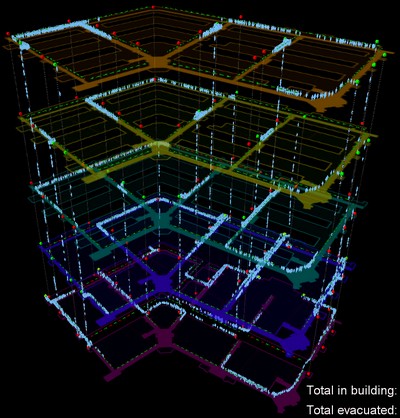

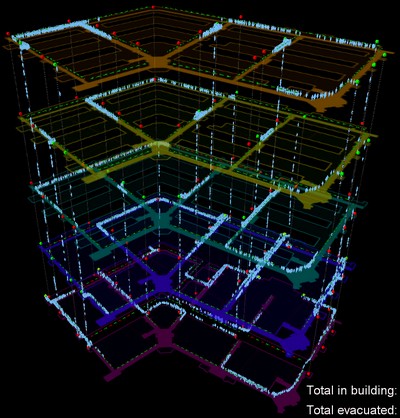

I worked with a team that built a number of grid-based tools used to simulate the movement of people through airports, train stations, consulates, and buildings. I got very involved with all of the evacuation-based tools as a technical PM and Product Owner. I also specified the parameters that would be used to define behaviors within the simulation and mocked up the GUI concepts needed to set them.

The evacuation tools routed around threats, modeled communication between entities and behaviors like fear, abstracted obstacles so details didn't have to be modeled too closely, and included queues and security for ingress management as well as evacuations. One of the products read in building layouts in a standard BIM format and applied grids automatically.
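
One common way to route occupants around threats on a grid is a breadth-first search outward from the exits. The sketch below shows that general technique and is not the routing method used in the products described; the grid legend (`#` wall, `T` threat, `E` exit, `.` open floor) and the function names are assumptions made for illustration.

```javascript
// Four-connected moves: down, up, right, left.
const MOVES = [[1, 0], [-1, 0], [0, 1], [0, -1]];

// Breadth-first search from every exit gives each reachable cell its step
// distance to the nearest exit. Walls and threat cells are never entered.
function distanceToExit(grid) {
  const rows = grid.length;
  const cols = grid[0].length;
  const dist = grid.map(() => Array(cols).fill(Infinity));
  const frontier = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      if (grid[r][c] === "E") {
        dist[r][c] = 0;
        frontier.push([r, c]);
      }
    }
  }
  // The array doubles as a FIFO queue; i is the read position.
  for (let i = 0; i < frontier.length; i++) {
    const [r, c] = frontier[i];
    for (const [dr, dc] of MOVES) {
      const nr = r + dr;
      const nc = c + dc;
      if (nr < 0 || nc < 0 || nr >= rows || nc >= cols) continue;
      const cell = grid[nr][nc];
      if (cell === "#" || cell === "T") continue; // blocked or dangerous
      if (dist[nr][nc] !== Infinity) continue;    // already visited
      dist[nr][nc] = dist[r][c] + 1;
      frontier.push([nr, nc]);
    }
  }
  return dist;
}

// An occupant steps to the neighbouring cell that is closest to an exit,
// or stays put if no neighbour improves on the current cell.
function nextStep(dist, r, c) {
  let best = [r, c];
  for (const [dr, dc] of MOVES) {
    const nr = r + dr;
    const nc = c + dc;
    if (nr < 0 || nc < 0 || nr >= dist.length || nc >= dist[0].length) continue;
    if (dist[nr][nc] < dist[best[0]][best[1]]) best = [nr, nc];
  }
  return best;
}

// Usage: the direct path through the middle row is blocked by a threat.
const grid = [
  "E....",
  ".#T#.",
  ".....",
];
const dist = distanceToExit(grid);
console.log(dist[2][4], nextStep(dist, 2, 4));
```

Because the distance field is computed once per change in the threat layout, large numbers of occupants can share it. Behaviors such as fear or communication between entities would be layered on top of a field like this.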

Two of the evacuation products included interactive, real-time components, which is where my experience came to the fore. One was an automated plug-in to an interactive training system that functioned like a multi-player video game. The actions of different "players" were coordinated using the HLA (High Level Architecture) protocol. Buildings would be evacuated in response to threats and the plug-in would manage the movement of large numbers of people out of a building or area without intervention from the players.

Another project, on which I served as co-PM, had a multi-company team that built an integrated system that sensed certain kinds of threats, managed the internal space to mitigate the threat, predicted the evolution of the threat (and its mitigation), determined an optimized series of routings out of the building, and simulated the movement of the occupants according to the optimized routing solution. The system was real-time in the sense that it did the sensing, prediction, optimization, and control as an event happened, and gave users a way to enter updated threat and movement information (and generate a new solution) based on information received during the event. The system also controlled lights and indicators within the building that served as guides directing the building's occupants along the most efficient routes toward the exits.

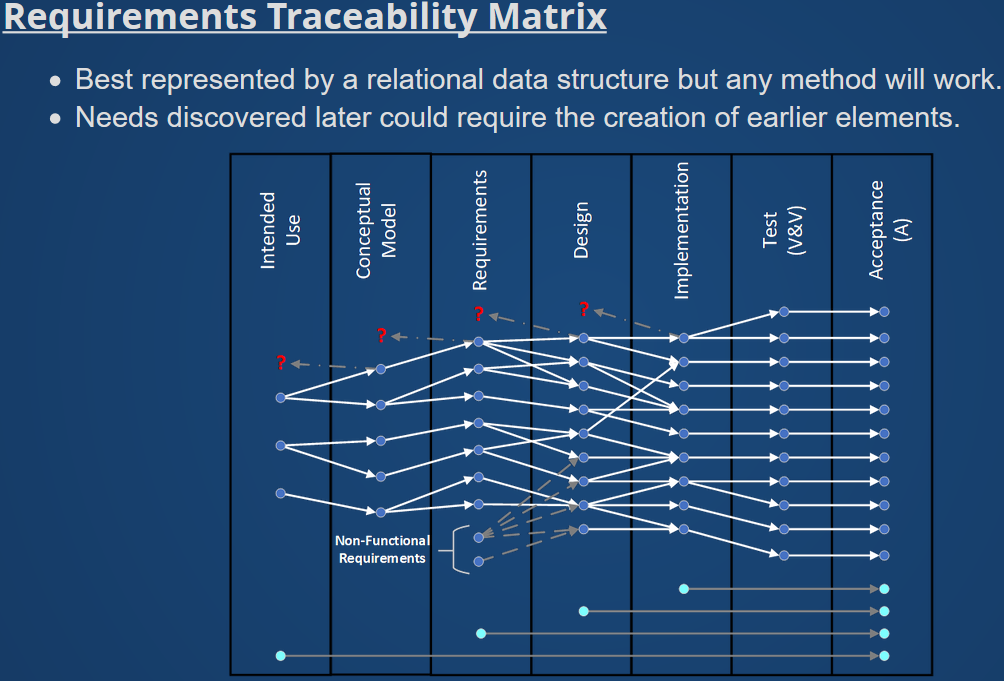

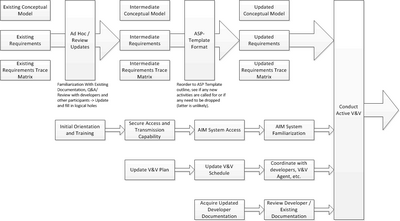

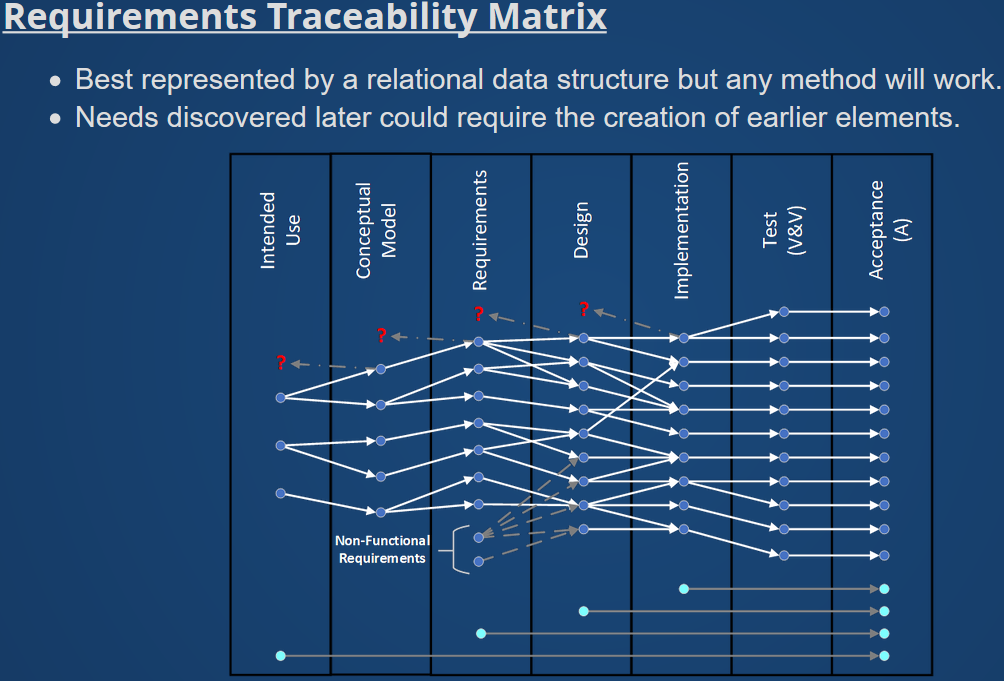

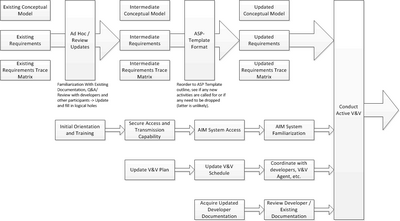

I've performed all manner of test and acceptance procedures over the years and gotten many systems through formal commissioning processes. At my last company I was often involved in formal V&V on both a project and program basis to win accreditation for simulation products used in high-level decision support.

We maintained a full set of user, technical, and management documentation for our maintenance and logistics simulation. This was revised at intervals as methods and interfaces were updated. We also completed a formal, two-year contract to conduct an independent V&V for a secure, web-based system intended to streamline and improve the management of entire fleets of aircraft for the Navy and Marine Corps.

I worked on numerous systems over the years that involved interactive, real-time communication between processes, though usually not directly to hardware devices. However, I worked in two environments that involved direct communication with hardware at a very detailed level. Both were written in Microsoft C++.

One environment was the off-the-shelf, configurable control program a parent company supplied with its induction melting furnaces. This program included a simple GUI, all of the control and archiving functions, and direct, low-level communication with numerous pieces of hardware through serial communications.

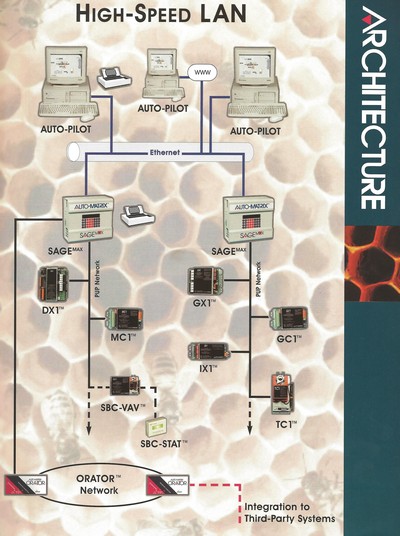

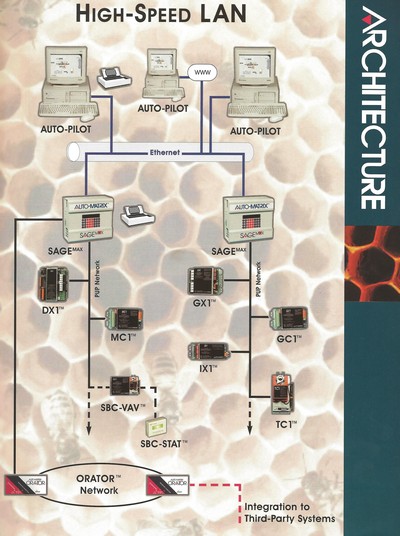

The other environment was the driver DLL our company supplied with its own line of HVAC control products. This involved serial communications with a range of devices in the company's published information protocol and also interrupt-driven management of TCP/IP information at the packet level.

I always look for opportunities to automate processes to improve their speed, accuracy, consistency, and robustness. This has led me to create numerous tools in the course of my formal work creating software, systems, analyses, and recommendations for direct customers. The product delivered to end-users is sometimes a tool itself but that has been comparatively rare. Most of my work in this area has been to improve and standardize internal working processes for myself and my colleagues. If I see a time-consuming, error-prone, inflexible process there's a good chance I'm going to build a tool to make things better.