On Wednesday I attended a Meetup organized by Pittsburgh Code & Supply. This particular event was hosted by Brian Kardell (see also here), and its formal title began with the prefix “[Chapters Web Standards]:”, which I think indicates that the talk is part of a series or a larger effort.

The talk itself (see slides here) was delivered remotely from Denmark by Kenneth R. Christiansen, who works for Intel on web standards.

Here is the current working draft of the Generic Sensor API specification.

I drove up to Pittsburgh to see the talk because of my experience working with real-time, real-world systems that included sensors and actuators. I had even run into some of the specific issues discussed when I was experimenting with Three.js and WebGL in preparation for the talk I gave at CharmCityJS. I wrote about my investigations here.

With that preamble out of the way: the presentation was really interesting. It was also very dense and delivered very quickly. The speaker demanded a lot of his audience, getting through all 67 slides in less than an hour. This worked, probably because the audience had self-selected for interest in the subject, and because the slides are posted here.

The talk described efforts to create a standard way of exposing and accessing sensors of various kinds. Many of these are built in to the devices themselves (like the accelerometers in three axes built into handheld devices like phones that allow sensing of orientation and other things) but the talk also described how to incorporate sensors built into external devices. One example involved an external Arduino board connected through the Chrome browser’s Bluetooth API as shown here:

I had known about the Bluetooth API but learned there was also a USB API, which also seems to be implemented only on Chrome.

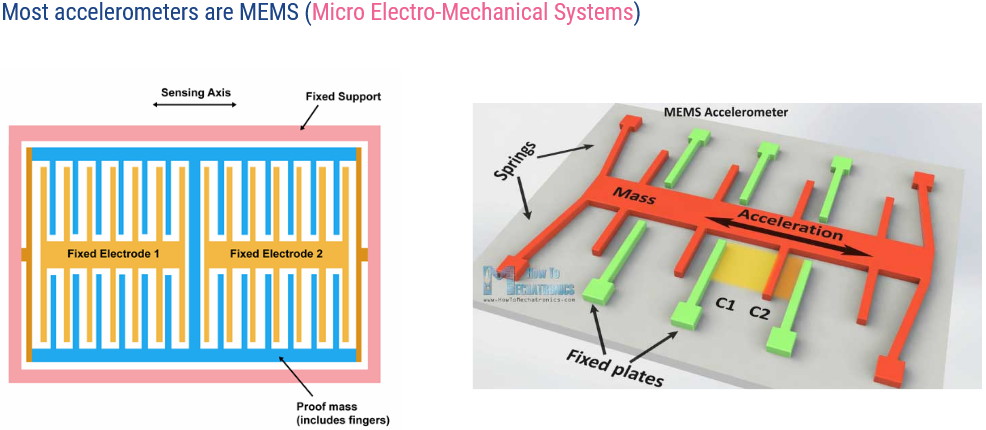
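To give a feel for what the standard looks like from a web page’s side, here is a minimal TypeScript sketch of reading a built-in accelerometer. It assumes the specification’s `Accelerometer` interface (a `frequency` option in hertz, nullable `x`/`y`/`z` readings, `start()`/`stop()`, and `onreading`/`onerror` handlers). The `watchAcceleration` helper and the `AccelerometerLike` type are hypothetical names of my own, and the constructor is passed in as a parameter so the code can be exercised without real hardware.

```typescript
// Structural type covering only the parts of the Generic Sensor API's
// Accelerometer interface used here. Readings stay null until the sensor
// has produced its first sample.
interface AccelerometerLike {
  readonly x: number | null;
  readonly y: number | null;
  readonly z: number | null;
  onreading: (() => void) | null;
  onerror: ((event: unknown) => void) | null;
  start(): void;
  stop(): void;
}

// Constructor shape: an options object with a sampling frequency in hertz.
type AccelerometerCtor = new (options?: { frequency?: number }) => AccelerometerLike;

// Hypothetical helper: create and start an accelerometer, forward each
// complete reading to `onSample`, and return a function that stops it.
function watchAcceleration(
  Ctor: AccelerometerCtor,
  frequencyHz: number,
  onSample: (x: number, y: number, z: number) => void,
): () => void {
  const sensor = new Ctor({ frequency: frequencyHz });
  sensor.onreading = () => {
    const { x, y, z } = sensor;
    // Ignore readings until all three axes have values.
    if (x !== null && y !== null && z !== null) {
      onSample(x, y, z);
    }
  };
  sensor.onerror = (event) => console.error("accelerometer error:", event);
  sensor.start();
  return () => sensor.stop();
}
```

In a browser that supports the API (the spec only exposes sensors in secure, HTTPS contexts), you would pass the global `Accelerometer` constructor, e.g. `watchAcceleration(Accelerometer, 60, (x, y, z) => console.log(x, y, z))`. TypeScript’s bundled DOM typings may not declare `Accelerometer`, so a cast or an ambient declaration might be needed.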

The talk included a number of highly informative graphics. The illustration of how accelerometers work was a classic case of a picture being worth a thousand words. I’d never taken the time to think about how they work, but Ken’s 25th slide led to an immediate “aha!” moment.

Subsequent images showed how gyroscopes and magnetometers work with similar verve.
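The accelerometer picture also makes a practical trick easy to see: when a device is at rest, its accelerometer mostly measures the reaction to gravity, so the direction of that reading reveals how the device is tilted. This is a simple example of a derived sensor. The sketch below is my own illustration, not code from the talk; it assumes the device is not otherwise accelerating and a common axis convention (x to the right, y toward the top of the screen, z out of the screen), and the names `tiltFromAcceleration` and `Tilt` are hypothetical.

```typescript
// Tilt angles in degrees, named by the axis the device is rotated about.
interface Tilt {
  aboutXDeg: number; // tipping the top edge toward or away from the viewer
  aboutYDeg: number; // tipping the left or right edge down
}

const RAD_TO_DEG = 180 / Math.PI;

// Estimate tilt from one accelerometer sample (m/s^2). Assumes the reading is
// dominated by gravity, so a device lying flat reads roughly (0, 0, +9.81).
// Sign conventions for the angles vary between platforms; this is one choice.
function tiltFromAcceleration(x: number, y: number, z: number): Tilt {
  // atan2 keeps the correct quadrant and, unlike asin(y / g), does not fail
  // when the measured magnitude is not exactly 9.81.
  const aboutXDeg = Math.atan2(y, z) * RAD_TO_DEG;
  const aboutYDeg = Math.atan2(-x, Math.hypot(y, z)) * RAD_TO_DEG;
  return { aboutXDeg, aboutYDeg };
}
```

For instance, `tiltFromAcceleration(0, 9.81, 0)` (a phone standing upright) gives a rotation of about 90 degrees around the x axis. Because only ratios of the components matter, the result does not depend on the exact value of gravity.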

The most interesting parts of the discussion involved derived and fusion sensors; fusion sensors combine the readings of several physical sensors into a single, unified, abstract sensor implemented in code. (See slides 14 and following.) The talk gave several examples of how fusion is accomplished, in both text and code.
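One common, simple way to fuse a gyroscope with an accelerometer is a complementary filter: integrate the gyroscope’s angular velocity for smooth short-term changes, and blend in the accelerometer’s tilt estimate to cancel the gyroscope’s long-term drift. I can’t say this is the technique used in the slides; it is a minimal single-axis sketch, and the class name `ComplementaryFilter` and the blend factor `alpha = 0.98` are illustrative assumptions. Angles are in radians and rates in rad/s, the unit the Generic Sensor API’s `Gyroscope` reports.

```typescript
// Single-axis complementary filter fusing gyroscope and accelerometer data.
class ComplementaryFilter {
  private angle: number;

  // alpha close to 1 trusts the gyroscope over short intervals; the remaining
  // (1 - alpha) weight slowly pulls the estimate toward the accelerometer.
  constructor(private readonly alpha = 0.98, initialAngle = 0) {
    if (alpha < 0 || alpha > 1) {
      throw new RangeError("alpha must be in [0, 1]");
    }
    this.angle = initialAngle;
  }

  // gyroRate: angular velocity about the axis (rad/s).
  // accelAngle: tilt about the same axis derived from the accelerometer (rad).
  // dt: seconds elapsed since the previous update.
  update(gyroRate: number, accelAngle: number, dt: number): number {
    const gyroAngle = this.angle + gyroRate * dt; // integrate angular velocity
    this.angle = this.alpha * gyroAngle + (1 - this.alpha) * accelAngle;
    return this.angle;
  }

  get value(): number {
    return this.angle;
  }
}
```

The design choice shows up clearly with a stationary, level device whose gyroscope has a constant bias `b`: integrating the gyroscope alone would drift without bound, while the filtered estimate settles at `alpha * b * dt / (1 - alpha)`, a small fixed offset.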

The talk also went into security concerns, which are obviously important.

I had also never heard the term “polyfill” before. It refers to code that implements a feature in browsers that do not support it natively, so that a page can rely on a feature that only some browsers provide.
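The core of the polyfill idea is feature detection: install a fallback only when the native feature is missing, so a real implementation always wins. A minimal sketch, where the function name `installPolyfill` is hypothetical and the target object is passed in (in a browser it would be `globalThis`):

```typescript
// Install `fallback` as `target[name]` only if the environment does not
// already provide it. Returns true when the fallback was installed.
function installPolyfill(
  target: Record<string, unknown>,
  name: string,
  fallback: unknown,
): boolean {
  if (name in target) {
    return false; // native (or previously installed) feature is present
  }
  Object.defineProperty(target, name, {
    value: fallback,
    writable: true,
    configurable: true,
    enumerable: false, // built-in globals are usually non-enumerable
  });
  return true;
}
```

For example, `installPolyfill(globalThis as unknown as Record<string, unknown>, "Accelerometer", MyAccelerometer)` would expose a hypothetical `MyAccelerometer` class only in browsers that lack a native `Accelerometer`; such a fallback could, for instance, be built on older device-motion events.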

The talk also referenced the Zephyr.js project, which implements a limited, full-stack (Node.js-based) version of JavaScript on small, IoT-type devices (like Arduino boards), as well as the Johnny Five project, which does something similar. I’ve been playing around with Arduino kits a little and am looking forward to trying these.

I haven’t included a huge amount of text here, but I have probably set a new record for links. That is an indication of how satisfying I found the talk, and it should provide a lot of food for thought going forward.