Operations Research Analyst / Data Analyst

I did some light operations research analysis in the 1990s but took up the profession fully starting in 2002. I began with a major emphasis on building simulation tools but eventually spent as much or more time performing analyses and making recommendations for improvements.

Although I carried out numerous studies and generated numerous results, the one thing the companies I worked with and I did not usually do was perform formal optimizations. My activities were more in the realm of Lean analysis, which looks to rearrange, substitute, or reconfigure processes to achieve improved results. The real-time evacuation guidance and threat detection system incorporated an optimization module, but it was produced and managed by my co-PM and I didn't have much to do with it. If you're wondering, it was written in LINDO.

I performed a wide variety of analyses based on simulation and various ad hoc techniques. I definitely worked with large data sets and performed, programmed, and leveraged a wide range of statistical functions in code and in (Excel) spreadsheets.

I worked with input data of various kinds, usually randomly varying data that had to be characterized so it could be used in Monte Carlo operations. The continuous simulations I did were always deterministic and involved properties (volume, elevation, length, cross-sectional area), relationships, and functions (e.g., density as a function of pressure, thermal conductivity as a function of temperature) that were known. Stochastic simulations used combinations of variables that varied randomly within a range and according to a specific distribution (or "shape"). The input data were usually collections of data points that formed a distribution within a specified range of error. Because any single run reflects only one set of random draws, it was usually necessary to run multiple iterations to generate a range of possible results.
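The idea of characterizing observed data and then running many iterations can be sketched roughly as follows. This is a minimal illustration and not the tooling I actually used: the observed values, the two-step process, and the function names are all made up, and it simply resamples the collected data points directly instead of fitting a named distribution.

```python
import random
import statistics

# Hypothetical observed durations in minutes (made-up illustration data).
observed_repair_minutes = [42, 55, 38, 61, 47, 90, 52, 44, 58, 49]
observed_inspect_minutes = [12, 15, 11, 18, 14, 13, 20, 16]


def simulate_once(rng):
    """One iteration: draw each input from its observed data and combine."""
    repair = rng.choice(observed_repair_minutes)
    inspect = rng.choice(observed_inspect_minutes)
    return repair + inspect


def run_monte_carlo(iterations, seed=0):
    """Run many iterations and return the sorted total durations."""
    rng = random.Random(seed)  # seeded so a study can be repeated exactly
    return sorted(simulate_once(rng) for _ in range(iterations))


results = run_monte_carlo(10_000)
summary = {
    "best_case": results[0],
    "expected": statistics.mean(results),
    "p95": results[int(0.95 * len(results)) - 1],
    "worst_case": results[-1],
}
print(summary)
```

Sorting the results makes it easy to read off the edges of the envelope as well as the expected value, which matters when the worst case is the main concern.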

Input data sets were often dirty and needed to be processed to be made usable. Event descriptions sometimes included multiple data points, and the holes could be filled in from context. For example, records of historical flight data might include airframe tracking number, departure time, departure location, destination, flight duration, mission type, and equipment. If surrounding data make it clear that the aircraft was in a given location at a given time, a missing or incorrect entry in a location field can be corrected. Other fixes are possible in different situations. It was important to understand that collecting perfectly accurate data for pointy-headed analysts back home would usually take a back seat to operational imperatives. That is probably as it should be, although steps should still be taken to reduce errors at the source.
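A context-based fix of the kind described for the flight records might look something like the sketch below. The field names, the sample records, and the rule itself (a missing departure location is taken from the same airframe's previous destination) are assumptions for illustration, not a description of any particular data set or of the cleaning code I wrote at the time.

```python
from collections import defaultdict

# Hypothetical flight records; field names and values are illustrative only.
flights = [
    {"airframe": "A100", "depart_time": 1, "origin": "BASE1", "destination": "BASE2"},
    {"airframe": "A100", "depart_time": 2, "origin": None, "destination": "BASE3"},
    {"airframe": "A100", "depart_time": 3, "origin": "BASE3", "destination": "BASE1"},
    {"airframe": "B200", "depart_time": 1, "origin": None, "destination": "BASE2"},
]


def fill_missing_origins(records):
    """Fill a missing origin from the same airframe's previous destination."""
    by_airframe = defaultdict(list)
    for rec in records:
        by_airframe[rec["airframe"]].append(dict(rec))  # copy; leave input untouched

    cleaned = []
    for history in by_airframe.values():
        history.sort(key=lambda r: r["depart_time"])
        for prev, curr in zip(history, history[1:]):
            if curr["origin"] is None and prev["destination"] is not None:
                curr["origin"] = prev["destination"]
                curr["origin_inferred"] = True  # flag the value as inferred, not recorded
        cleaned.extend(history)
    return cleaned


cleaned = fill_missing_origins(flights)
for rec in cleaned:
    print(rec)
```

Flagging inferred values keeps the correction visible, and a record with no surrounding context (the single B200 flight here) is left with its hole rather than guessed at.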

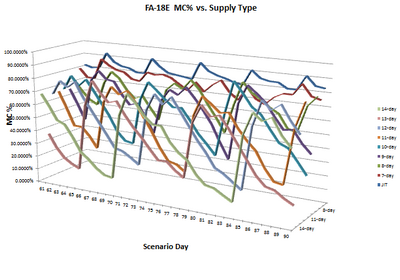

The thermodynamic, fluid, and material handling simulations I did generated point answers (although complex systems could generate complex and unexpected behaviors). I sometimes performed parametric studies, but a given set of inputs always led to a specific set of outputs. The operations research simulations, by contrast, generated a range of results and provided insight into the expected results along with the best-case and worst-case possibilities. Investigators were usually most concerned with the worst-case edge of the envelope.